How to Detect AI-Generated Content: A Complete Guide

Learn how to detect AI-generated content with this complete guide. We cover AI detectors, linguistic clues, and manual verification to spot AI writing.

Spotting AI-generated content isn't about finding a single "gotcha" moment. It’s more like detective work—a layered process that blends tech-savvy analysis with good old-fashioned human intuition. You start by looking for the subtle tells in the language itself, things like eerily perfect grammar or a complete lack of a distinct voice, and then use that as a jumping-off point for a deeper dive.

AI Is Everywhere, So How Do We Spot It?

Let's face it: AI-generated text has flooded the internet. It's in marketing emails, news snippets, blog posts, and even product reviews. What started as a novelty has quickly become a massive part of our daily information diet, making it harder than ever to know if you're reading something written by a person or a machine.

This isn't just an academic problem anymore; it has real-world consequences. With a flood of powerful AI tools for content creators now available to everyone, the sheer volume of automated content is staggering. The lines between authentic human expression and machine-generated text are getting blurrier by the day.

Why We Need to Get Good at This

Being able to identify AI-written text is no longer a niche skill. It’s becoming essential for anyone who values authenticity and quality.

- For Educators: It's about ensuring students are actually learning and not just offloading their assignments to a bot.

- For Brands: It's about protecting a unique brand voice and making sure your content doesn't sound generic and robotic.

- For SEOs & Content Folks: Search engines are cracking down on low-quality, unhelpful content. Relying too heavily on unedited AI is a recipe for content that just doesn't connect or rank.

- For Everyone: It's a critical tool in the fight against misinformation, helping us spot propaganda and fake news campaigns that are often powered by algorithms.

The problem is bigger than most people realize. In the 2023-2024 school year alone, Turnitin scanned 200 million student papers, and its detector flagged 11% of them as containing at least 20% AI writing. Even more striking, 3% of them (about 6 million papers) were flagged as more than 80% AI-generated. That shows you just how quickly these tools have been adopted.

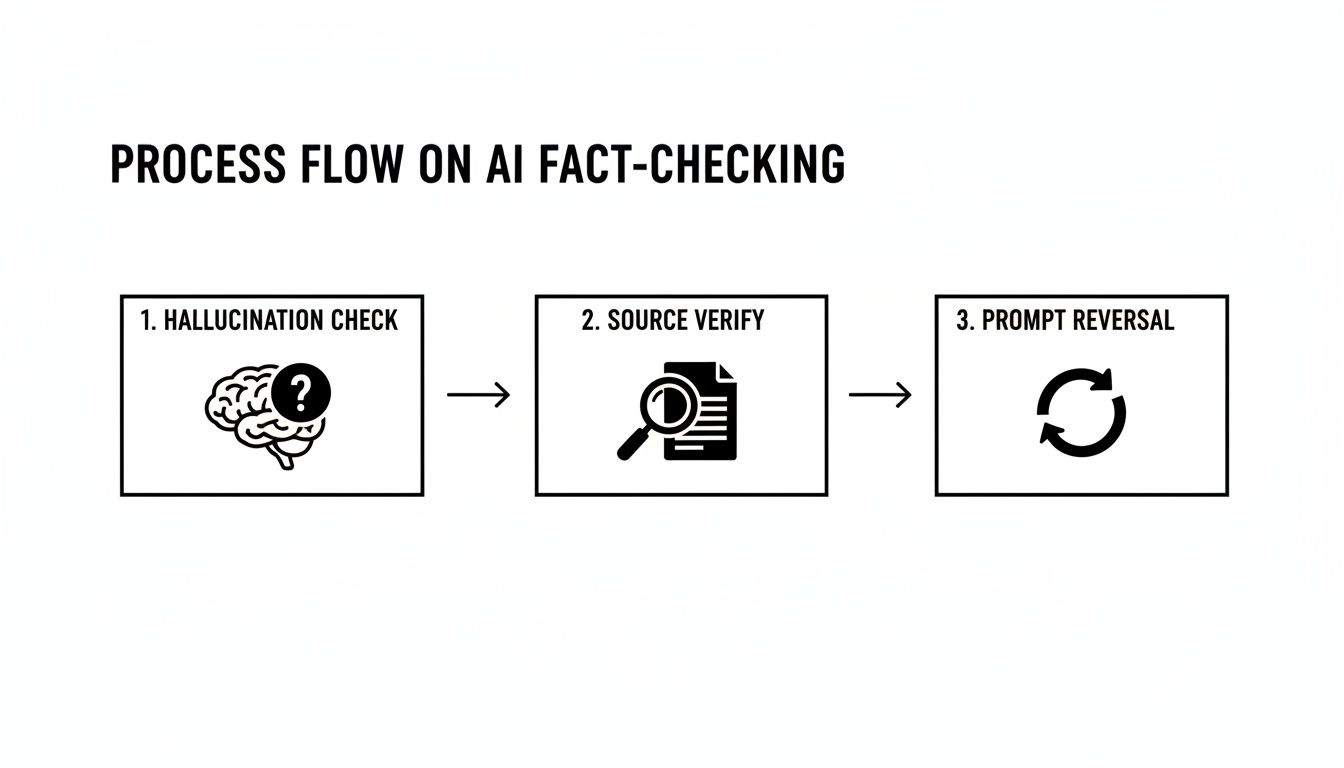

A Quick Look at the Strategy

Before we get into the nitty-gritty, let's zoom out. The best way to approach AI content detection is by combining three core methods. Each one gives you a different piece of the puzzle.

Core AI Content Detection Methods at a Glance

| Detection Method | What It Looks For | Best For |
|---|---|---|
| Linguistic Analysis | Unnatural phrasing, repetitive sentence structures, lack of personal voice, and overly formal tone. | Quick, initial gut checks and spotting obvious AI tells without any special tools. |
| AI Detection Tools | Statistical patterns common in machine-generated text, such as low perplexity (word choices a language model finds easy to predict) and low burstiness (little variation in sentence length and structure). | Getting a fast, data-backed probability score to confirm or challenge your initial suspicions. |
| Manual Verification | Fact-checking claims, verifying sources, and looking for signs of human experience or "digital breadcrumbs." | In-depth analysis when you need the most confident answer you can reach, especially for high-stakes content. |

Relying on any single one of these methods is a mistake. A tool might give you a false positive, and even a human eye can be tricked. But when you use them together, you can build a much more reliable and confident assessment.

It's All About the Blended Approach

Here’s the thing: you can’t just plug text into a tool and expect a perfect answer. The most reliable AI detectors are still a work in progress, and they often struggle to keep up with how fast the generation models are improving.

The real skill isn't just running a scan; it's about developing an ear for what sounds real. You start to notice the absence of a human touch—the lack of a quirky opinion, the perfectly polished but emotionally hollow prose.

This guide is designed to help you build that skill. We'll walk through how to spot the linguistic giveaways, how to use AI detectors as a helpful guide (not a final judge), and how to perform manual checks that a machine could never do. By combining these techniques, you’ll be able to confidently figure out what’s real and what’s not.

Spotting the Telltale Signs of AI Writing

Before you reach for a detection tool, take a moment. Your own critical eye is the first scanner you should use. AI models are trained on an unbelievable amount of human-written text, but the final product often feels just a little… off. Learning to recognize these quirks is your first line of defense, even if it shouldn't be your only one.

The biggest giveaway is often a polished yet completely soulless tone. The writing is grammatically perfect, the logic flows, and nothing is remotely offensive. While that sounds great in theory, it’s often missing the personality, the unique voice, and the subtle imperfections that make human writing worth reading. It feels like content created to check a box, not to connect with a person.

Unpacking the Linguistic Clues

The first thing I always look for is a weirdly consistent and predictable rhythm. AI-generated text often falls into this monotonous, repetitive cadence. You'll see sentence after sentence of roughly the same length and structure, which is something a human writer naturally avoids.

Another dead giveaway is the clumsy overuse of certain transition words. AI models have a few favorites they lean on far too heavily, deploying them in a way that feels forced and unnatural.

Keep an eye out for these telltale words:

- "Moreover": This is a classic. It’s often used to tack on another point where a human would just say "Also" or start a new sentence.

- "Furthermore": Much like "moreover," this one can make the writing feel overly formal and stuffy.

- "In conclusion": AI loves to wrap things up with a neat little bow, but this phrase often feels formulaic and out of place in anything but a school essay.

- "Delve into": AI is always "delving into" topics. It’s a phrase that tries to sound profound but usually comes off as dramatic.

This happens because the AI isn't making a stylistic choice; it's just regurgitating patterns it learned from its training data. When every other paragraph starts with a grandiose transition, you can be pretty sure a human wasn't behind the keyboard.
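If you want to put a number on this tell, you can count how often these stock transitions show up. Here's a minimal Python sketch, assuming the four phrases above are your whole watch list and that simple whole-word matching is good enough. There's no established "normal" rate, so treat the result as something to compare against writing you know is human, not as a verdict.

```python
import re

# Watch list taken from the examples above (an assumption; extend as needed).
TRANSITIONS = ["moreover", "furthermore", "in conclusion", "delve into"]


def transition_counts(text: str) -> dict:
    """Count case-insensitive, whole-word occurrences of each stock transition."""
    lowered = text.lower()
    return {
        phrase: len(re.findall(r"\b" + re.escape(phrase) + r"\b", lowered))
        for phrase in TRANSITIONS
    }


def transitions_per_100_words(text: str) -> float:
    """Total stock-transition hits, normalized per 100 words of text."""
    words = re.findall(r"[A-Za-z']+", text)
    if not words:
        return 0.0
    return 100 * sum(transition_counts(text).values()) / len(words)


sample = ("Cloud tools cut costs. Moreover, they scale. Furthermore, they are "
          "flexible. In conclusion, let us delve into the details.")
print(transition_counts(sample))                    # each phrase appears once
print(round(transitions_per_100_words(sample), 1))  # 4 hits in 19 words -> 21.1
```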

A key idea here is something called burstiness. It’s the natural ebb and flow of sentence length in human writing. We mix short, punchy statements with longer, more descriptive ones. AI is terrible at this. It tends to produce text with very low burstiness—a flat, uniform wall of sentences that all feel the same.
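There's no single agreed-upon formula for burstiness. One simple proxy, used here purely for illustration, is the coefficient of variation of sentence lengths: the standard deviation divided by the mean. This Python sketch assumes a sentence ends at ., !, or ? followed by whitespace, which is a deliberately naive splitter.

```python
import re
import statistics


def sentence_lengths(text: str) -> list:
    """Split on ., !, or ? followed by whitespace and count words per sentence."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [len(s.split()) for s in sentences if s.strip()]


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length (std dev / mean).

    Values near 0 mean every sentence is about the same length; higher values
    mean a more varied, "bursty" rhythm.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


flat = "The tool is fast. The tool is cheap. The tool is safe."
varied = "It broke. We spent the whole weekend rebuilding the server from scratch. Never again."
print(burstiness(flat))              # every sentence has 4 words, so 0.0
print(round(burstiness(varied), 2))  # mixed lengths give a clearly higher score
```

A low score on its own proves nothing (a list of short instructions is flat by design), but it gives you something concrete to compare across drafts.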

The Problem of the Neutral, Know-It-All Tone

Let's be real: human writers have opinions, biases, and a distinct voice. We go on tangents, share personal stories, and even make the occasional grammar mistake. AI-generated text, on the other hand, is usually stripped of any real personality. It adopts this sterile, encyclopedic tone that presents information without any perspective or feeling.

The result is content that might be factually accurate but is emotionally vacant. You won't find a strange but memorable metaphor, a moment of vulnerability, or a bold opinion. The writing feels sanitized, as if it were approved by a committee terrified of offending someone.

Just look at the difference:

| AI-Generated Paragraph | Human-Written Paragraph |
|---|---|
| The integration of cloud computing offers significant benefits for businesses, including enhanced scalability, cost efficiency, and improved data accessibility. This paradigm shift allows organizations to adapt quickly to market demands. | When we moved our startup to the cloud, it was a total game-changer. Suddenly, we weren't blowing our tiny budget on server maintenance, and I could finally access our customer data from a coffee shop without wanting to pull my hair out. |

The AI version is technically correct but completely sterile. The human version gets the same idea across but grounds it in relatable experience and emotion. It's just more interesting to read. Many services trying to create "undetectable" AI focus on faking these human qualities, which is why a trained eye is so crucial. You can see more on this by exploring how undetectable AI works and the constant cat-and-mouse game it creates.

Identifying Repetitive Ideas and Generic Fillers

Another subtle but common clue is circular logic. An AI will often introduce an idea, explain it, and then summarize it using almost the exact same language, sometimes all within the same paragraph. It's trying to hit a word count or cover a topic from every angle, not build a tight, compelling narrative.

You should also be on the lookout for generic filler that adds words but no real substance. Phrases like "It is important to note that..." or "This plays a crucial role in..." are classic fluff. A human writer with a clear point to make is usually more direct. Training yourself to spot these patterns of repetition and fluff will get you most of the way there, long before you ever need an automated tool.
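Both of these patterns can be roughed out in code. This Python sketch flags the two filler phrases mentioned above and finds pairs of sentences whose vocabularies overlap heavily (Jaccard similarity of their word sets). The filler list and the 0.6 threshold are illustrative assumptions, not calibrated values, and word overlap only approximates "saying the same thing twice."

```python
import re
from itertools import combinations

# Filler phrases from the examples above; not an exhaustive list.
FILLERS = ["it is important to note that", "plays a crucial role in"]


def split_sentences(text: str) -> list:
    """Naive splitter: a sentence ends at ., !, or ? followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def word_set(sentence: str) -> set:
    return set(re.findall(r"[a-z']+", sentence.lower()))


def jaccard(a: set, b: set) -> float:
    """Shared words divided by total distinct words (0 = no overlap, 1 = identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def padding_report(text: str, threshold: float = 0.6) -> dict:
    sentences = split_sentences(text)
    lowered = text.lower()
    fillers = [phrase for phrase in FILLERS if phrase in lowered]
    # Sentence pairs with heavy word overlap may be restating the same idea.
    repeats = [
        (i, j)
        for (i, a), (j, b) in combinations(enumerate(sentences), 2)
        if jaccard(word_set(a), word_set(b)) >= threshold
    ]
    return {"fillers": fillers, "repeated_pairs": repeats}


text = ("Cloud computing improves scalability for businesses. "
        "It is important to note that security matters. "
        "In short, cloud computing improves scalability for businesses.")
print(padding_report(text))
```

Here the first and last sentences share 6 of 8 distinct words, so pair (0, 2) is flagged as a likely restatement.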

Using AI Content Detectors the Smart Way

Once you've done your own read-through and spotted some potential red flags, the next logical step for most people is to plug the text into an AI detector. And that’s a good move—as long as you treat these tools as investigative partners, not as judges handing down a final verdict.

The single biggest mistake I see is taking a score as gospel. An AI detector gives you a probability score, a useful signal to guide your investigation. It should never, ever be the final word. The technology is in a constant cat-and-mouse game with the AI models it’s trying to catch, and assuming it's infallible can lead to serious errors, from false accusations in a classroom to bad content decisions at work.

So, What Do the Scores Really Mean?

When a tool spits out "87% AI-Generated," it's not saying it has proof. It's making a statistical guess. The score simply means the text's patterns match what the detector has been trained to see as machine writing, such as low "perplexity" (the word choices are easy for a language model to predict) and low "burstiness" (little of the natural variation in sentence length and rhythm that human writing has).
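To make "low perplexity" concrete: perplexity is the exponential of the average negative log-probability a language model assigns to each token that actually appears. Real detectors get those probabilities from a trained model. This Python sketch skips that step and takes hypothetical per-token probabilities as input, so it only illustrates the formula.

```python
import math


def perplexity(token_probs: list) -> float:
    """Perplexity = exp(-(1/N) * sum(log p_i)).

    Each p_i is the probability a language model gave to the token that
    actually appeared. Lower perplexity means the text was easier to predict.
    """
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_log_prob = sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(-avg_log_prob)


predictable = [0.9, 0.8, 0.95, 0.85]  # hypothetical: the model was rarely surprised
surprising = [0.2, 0.05, 0.3, 0.1]    # hypothetical: the model was often surprised
print(round(perplexity(predictable), 2), round(perplexity(surprising), 2))
```

A useful sanity check: if every token had probability 0.5, perplexity is exactly 2, as if the model were choosing between two equally likely words at each step.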

Think of it like a weather forecast. A 90% chance of rain doesn't mean it’s definitely going to pour, but it’s a very strong hint that you should grab an umbrella. A high AI score works the same way; it’s a sign that you need to dig deeper.

Key Takeaway: An AI detector's score is a prompt, not proof. Use it to flag text that needs a closer look, but always back it up with your own manual analysis and fact-checking. A high-stakes decision should never rest on a single scan.

The Accuracy Problem: Why One Tool Isn't Enough

Let’s be honest: AI detectors can be flaky. They're notorious for both false positives (flagging human work as AI) and false negatives (missing obvious AI content). Some research has even shown they’re more likely to misidentify text from non-native English speakers or writing on highly technical topics.

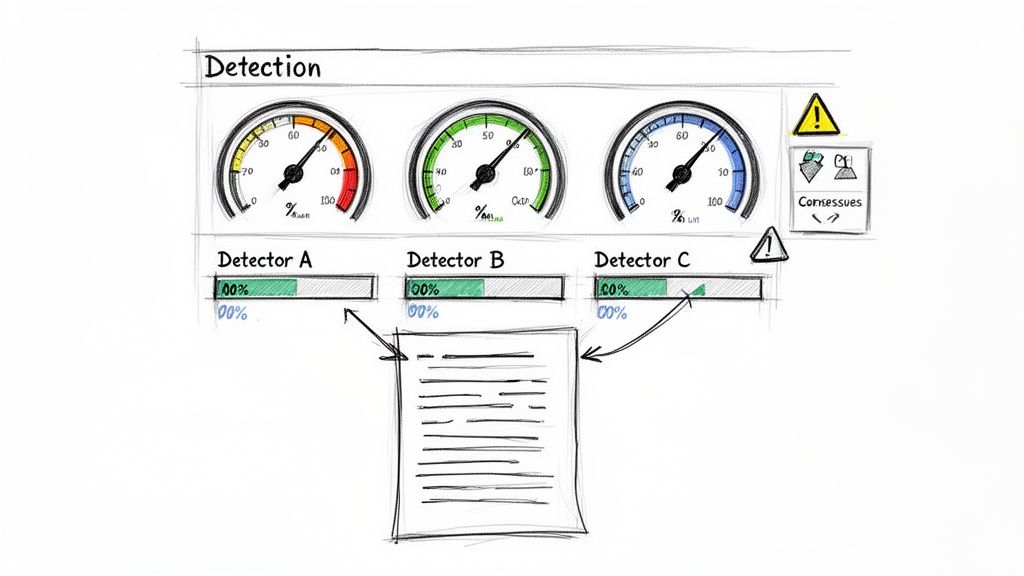

This is exactly why relying on just one tool is a recipe for disaster. Each detector uses a slightly different algorithm and was trained on different data. One might catch something another completely misses. Your best bet is to cross-reference.

Here’s a practical workflow:

- Run the text through at least two or three different detectors.

- Look for a consensus. If they all come back with high AI probability scores, your confidence in that assessment should be much higher.

- Investigate the outliers. If one tool says 95% AI and another says 10%, that's a huge red flag that the text is ambiguous. In this case, you have to lean much more on your manual verification skills.
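The consensus-and-outlier logic above can be sketched in a few lines of Python. The detector names, the 0-to-1 score scale, and every threshold here are hypothetical assumptions. Real tools report scores in different ways, so you'd need to normalize them first. Even a "consensus high" result only tells you to keep investigating.

```python
from statistics import mean


def triage(scores: dict, high: float = 0.8, low: float = 0.2,
           max_spread: float = 0.5) -> str:
    """Turn several detector scores (0.0 to 1.0 AI probability) into a next step.

    The thresholds are illustrative assumptions, not calibrated values.
    """
    if len(scores) < 2:
        return "need more detectors"
    values = list(scores.values())
    # A big gap between tools means the text is ambiguous: lean on manual checks.
    if max(values) - min(values) > max_spread:
        return "disagreement: rely on manual verification"
    avg = mean(values)
    if avg >= high:
        return "consensus high: investigate further"
    if avg <= low:
        return "consensus low: no strong signal"
    return "inconclusive: manual review"


print(triage({"detector_a": 0.95, "detector_b": 0.10}))
print(triage({"detector_a": 0.92, "detector_b": 0.88, "detector_c": 0.85}))
```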

This multi-tool approach gives you a more balanced and evidence-based view. You're no longer at the mercy of one flawed algorithm. For educators, the stakes are especially high; it's worth understanding how academic tools like Turnitin approach AI detection to see this in practice.

Comparing Popular AI Detector Strengths and Weaknesses

To get the most out of these tools, it helps to understand what they do well and where they fall short. There's no single "best" detector; they all have their place. A smart strategy involves using a combination of them to cover your bases.

| Detector Type | Primary Strength | Common Limitation | Best Use Case |
|---|---|---|---|
| Statistical Analysis Tools | Effective at identifying older AI models (like GPT-3) by analyzing word choice and sentence predictability. | Can be easily fooled by newer, more complex models and "humanized" or paraphrased AI text. | A good first-pass scan for catching less sophisticated AI-generated content. |
| Neural Network-Based Tools | Better at detecting nuanced patterns from newer models (GPT-4 and beyond) by analyzing deeper linguistic features. | Prone to false positives with highly structured or formal human writing (e.g., legal documents, scientific papers). | Cross-referencing results from other tools to confirm suspicions about advanced AI writing. |
| Watermarking & Provenance | Aims to embed an invisible signal into AI-generated content at the point of creation for definitive identification. | Not widely adopted yet, and any watermark can potentially be stripped or manipulated. | A potential future solution but not a practical method for most existing content today. |

Ultimately, layering these different approaches gives you a much clearer picture than relying on a single source of truth.

The Human Element Is Still Your Best Asset

We need these tools because, frankly, our own gut feelings aren't enough. One 2023 study found that humans are barely better than a coin toss at this, correctly identifying AI-generated text just 51.2% of the time. This gap in our perception is what has allowed hundreds of AI-powered "news" sites to pop up and spread junk across the internet. You can find the full research on human detection of AI-generated content at cacm.acm.org.

That statistic says it all. AI detectors are essential aids that compensate for our natural limitations. But they are just that—aids. The final call must always come from an informed person who can weigh all the evidence, from subtle linguistic tells to the data from multiple scanners, before making a final judgment.

Digging Deeper: Fact-Checking and Source Verification

Alright, so the linguistic analysis and the detection tools are giving you mixed signals. This is where the real detective work begins. We need to move beyond just the style of the writing and start probing its substance.

The biggest giveaway here is what we call AI "hallucinations." This is when a model confidently spits out fabricated facts, made-up quotes, or non-existent sources, presenting them as gospel. They can be incredibly tricky to spot because they look real. An AI can invent a research paper with a perfectly plausible title, assign it to a real expert in the field, and even claim it was published in a known journal. Hunting down these fabrications is one of the most reliable ways to unmask an AI.

What an AI Hallucination Looks Like in the Wild

Think of it this way: AI models don't know anything. They're just incredibly sophisticated prediction engines, guessing which word should come next. Sometimes, this process leads them to stitch together information that looks completely legitimate on the surface but has zero basis in reality.

It costs the AI practically nothing to generate this plausible-sounding nonsense. For us humans, though, the cost of manually verifying it can be significant.

Here are the classic red flags I always look for:

- Unsupported, Hyper-Specific Stats: You see a claim like, "83% of small businesses saw a direct increase in sales after implementing this strategy." It sounds authoritative, right? But if there's no link, no mention of the survey, no report cited—it’s highly suspicious.

- Vague, Hand-Wavy Attributions: Keep an eye out for phrases like "studies show," "experts agree," or "research indicates." A real writer, especially an expert, is usually keen to show their work and will cite their sources with more precision.

- Perfectly Crafted but Phantom Quotes: An AI might generate the perfect quote to summarize a point and attribute it to a well-known person. If you copy that exact quote and pop it into a search engine, you’ll often find it exists nowhere else on the internet.
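A quick script can surface candidates for the first two red flags before you start searching. This Python sketch is a heuristic built on simple assumptions. It counts as a "citation" only a URL or a parenthetical like (Lee, 2023) in the same sentence, and its vague-attribution list is just the three phrases above. It won't catch phantom quotes; those still need a search.

```python
import re

VAGUE = ["studies show", "experts agree", "research indicates"]
PERCENT = re.compile(r"\b\d+(?:\.\d+)?%")
# Assumed citation shapes: a URL, or a parenthetical ending in a year, e.g. (Smith, 2021).
CITATION = re.compile(r"https?://|\(\w[^)]*\d{4}\)")


def red_flags(text: str) -> list:
    """Return (kind, sentence) pairs for uncited statistics and vague attributions."""
    flags = []
    for sentence in re.split(r"(?<=[.!?])\s+", text.strip()):
        lowered = sentence.lower()
        if PERCENT.search(sentence) and not CITATION.search(sentence):
            flags.append(("uncited statistic", sentence))
        for phrase in VAGUE:
            if phrase in lowered:
                flags.append(("vague attribution", sentence))
    return flags


sample_text = ("83% of small businesses saw a direct increase in sales. "
               "Studies show this works. "
               "Our survey found 40% growth (Lee, 2023).")
for kind, sentence in red_flags(sample_text):
    print(kind, "->", sentence)
```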

My Go-To Verification Workflow

When a specific claim just doesn't feel right, don't let it slide. A structured approach is your best friend for quickly confirming or debunking it. This kind of investigative mindset is essential if content integrity is your goal.

- First, isolate the core claim. Pull out the single most specific, verifiable piece of information you can find. It could be a statistic, the title of a paper, or that direct quote.

- Next, use precise search queries. Don't just Google the general topic. Wrap the exact phrase or title in quotation marks. For instance, instead of searching for cat sleeping studies, search for "The Socio-Economic Impact of Feline Nap Patterns, 2021".

- Finally, go straight to the source. If an author or publication is named, navigate directly to their official website or Google Scholar profile. If the article isn't listed there, that's a strong sign it may be fabricated. This process of cross-verification is a lot like what's needed when you check for plagiarism: you're confirming that the content's origin is legitimate.
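If you verify claims often, a tiny helper can build these exact-phrase searches for you. This Python sketch assumes the public query-string formats of Google Search (google.com/search?q=) and Google Scholar (scholar.google.com/scholar?q=). It only builds links to open in a browser; it doesn't fetch or parse any results.

```python
from urllib.parse import urlencode


def exact_phrase_urls(claim: str) -> dict:
    """Build search URLs that wrap the claim in quotation marks for an exact match."""
    # urlencode percent-encodes the quotes (%22) and turns spaces into '+'.
    query = urlencode({"q": f'"{claim}"'})
    return {
        "web": f"https://www.google.com/search?{query}",
        "scholar": f"https://scholar.google.com/scholar?{query}",
    }


for name, url in exact_phrase_urls("The Socio-Economic Impact of Feline Nap Patterns").items():
    print(name, url)
```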

A word of caution: Be wary of look-alike names and domains. A hallucinated source might be cited as coming from the "Journal of Advanced Economics," when the real, prestigious publication is the "American Journal of Economics." Differences this subtle are easy to miss at a quick glance.
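If you keep a list of publications you trust, fuzzy string matching can flag near-miss names for a closer look. This Python sketch uses the standard library's difflib.SequenceMatcher. The one-entry allow-list (the name from the example above) and the 0.6 similarity threshold are illustrative assumptions. A "look-alike" result means "check this by hand," not "this is fake."

```python
from difflib import SequenceMatcher
from typing import Optional, Tuple

# Hypothetical allow-list; in practice, build it from sources you already trust.
KNOWN_JOURNALS = ("American Journal of Economics",)


def similarity(a: str, b: str) -> float:
    """Character-level similarity ratio between 0.0 and 1.0, ignoring case."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def check_journal(cited: str, known: tuple = KNOWN_JOURNALS,
                  threshold: float = 0.6) -> Tuple[str, Optional[str]]:
    """Classify a cited journal name as an exact match, a look-alike, or unknown."""
    for name in known:
        if cited.strip().lower() == name.lower():
            return ("exact", name)
    best = max(known, key=lambda name: similarity(cited, name))
    if similarity(cited, best) >= threshold:
        return ("look-alike", best)  # close but not identical: verify manually
    return ("unknown", None)


print(check_journal("Journal of Advanced Economics"))
print(check_journal("Botany Quarterly"))
```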

Context is Your Secret Weapon

Beyond just checking individual facts, you have to zoom out and look at the whole picture. Does this piece of content actually make sense in its broader context?

Human-written work almost always carries a "digital fingerprint"—a history of expertise, a consistent viewpoint, and a trail of related work.

Start asking yourself these kinds of critical questions:

- Is it consistent with the author's work? If a famous marine biologist suddenly drops a highly technical article about cryptocurrency trading, that should raise an eyebrow.

- Does it fit the publication? A popular tech blog that out of nowhere posts a dense piece of legal analysis might be using AI to branch out without having the actual expertise on staff.

- Where's the personal experience? This is a big one. AI has no life experience. If a travel blog describes a trip in vivid detail but is completely devoid of personal photos, unique anecdotes, or sensory details that couldn't be scraped from a dozen other sites, you might be reading an AI's fantasy vacation.

The "Prompt Reversal" Test

Here’s one of my favorite advanced techniques, something I call the "prompt reversal test." It’s simple: you try to recreate the suspicious text yourself using a basic prompt in a tool like ChatGPT or Claude.

Let's say you come across a paragraph that just feels a bit too generic, too formulaic. Head over to an AI tool and give it a simple prompt like, "Write a paragraph about the benefits of content marketing for small businesses."

If the AI's output is eerily similar in structure, tone, and phrasing to the text you're investigating, that's a very strong sign the original was AI-generated. Human writing just isn't that predictable. This test is a fantastic way to sniff out low-effort, unedited AI content.
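To make the comparison less of a gut call, you can score how closely the regenerated paragraph tracks the suspect one. This Python sketch assumes you've pasted the AI tool's output in by hand (there are no API calls). It uses word-level difflib.SequenceMatcher as a stand-in similarity measure. What counts as "eerily similar" is still your call, and because AI output varies from run to run, comparing against a few regenerations gives a fairer picture than a single one.

```python
import re
from difflib import SequenceMatcher


def words(text: str) -> list:
    return re.findall(r"[a-z']+", text.lower())


def reversal_similarity(suspect: str, regenerated: str) -> float:
    """Word-level similarity from 0.0 (nothing in common) to 1.0 (identical wording)."""
    return SequenceMatcher(None, words(suspect), words(regenerated)).ratio()


suspect = "Content marketing helps small businesses build trust and attract customers."
regenerated = "Content marketing helps small businesses build trust, attract customers, and grow."
unrelated = "My nephew and I repainted the garage last weekend."
print(round(reversal_similarity(suspect, regenerated), 2))  # high overlap
print(round(reversal_similarity(suspect, unrelated), 2))    # almost none
```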

A Practical Workflow for Spotting AI-Generated Content

Putting theory into practice is where the real skill comes in. Having a solid workflow takes the guesswork out of spotting AI-generated content and turns it into a structured, repeatable process. This is how you blend your own gut feelings with the right tools to make a call you can actually stand behind.

Let’s walk through a real-world example. Say you're an editor looking over a freelance submission about historical gardening techniques. The writing is clean, the structure is solid, but something just feels… off. It’s a little too perfect, a little too flat.

Here’s the process I’d follow.

The Initial Gut-Check Read

First things first, just read the piece. Don't touch any tools yet. Get a feel for the text itself. Are there any of the subtle quirks or imperfections that make writing feel human? Be on the lookout for an overly consistent sentence structure or a sterile, almost encyclopedic tone that’s completely devoid of personality.

With our gardening article, you might notice that while the facts are there, it’s missing any real flavor. There are no personal stories, no sensory details, and no unique takes. The writer never mentions the smell of damp soil after rain or the pure frustration of a rabbit eating your prize-winning carrots—the kind of stuff a genuine gardener would almost certainly include.

A big part of this initial check is what some call "burstiness." Human writing naturally ebbs and flows, mixing short, punchy sentences with longer, more detailed ones. AI often churns out text with a monotonous rhythm, where every sentence is roughly the same length and structure. It just feels flat.

Cross-Reference with AI Detectors

After your own read-through, it’s time to bring in the machines. The key here is to run the text through two or three different AI detection tools. Never, ever rely on a single score. The goal isn't to get a definitive verdict from a tool; it's to look for a general consensus. If one says 95% AI and another says 20%, that tells you the text is tricky and needs a much closer human look.

For our gardening piece, let's imagine two of the three detectors flag it with a high probability of being AI-generated. That’s not proof, but it's a strong signal that backs up your initial gut feeling. Now you know it’s time to dig deeper.

Fact-Checking for Hallucinations

This is where you go on the hunt for AI "hallucinations"—those completely fabricated facts, quotes, or sources that sound plausible but don't actually exist. Pull out the most specific claims in the article. This could be a direct quote from a historical figure or a reference to a particular botanical study.

Now, head to your search engine and wrap that exact quote or study title in quotation marks. If you can't find a single other mention of it online, that’s a massive red flag.

This simple exact-phrase search is incredibly effective for deep-dive verification. It turns a vague suspicion into a clear, actionable check you can use on any piece of content that crosses your desk.

Considering the Bigger Picture

Finally, take a step back and look at the context. Does this article even make sense coming from this author? If the freelancer’s portfolio is packed with deep-dives on blockchain and they suddenly hand in a flawless piece on 18th-century botany, that’s reason enough to be skeptical. This holistic view often provides the last piece of the puzzle. Building out a process like this is crucial if you want to detect AI content like a pro.

Even as the market for these detection tools is projected to skyrocket to $6.96 billion by 2032, their accuracy is still a huge question mark. One study found a popular tool only correctly identified 26% of AI-written text, and worse, it falsely flagged human writing 9% of the time. This kind of unreliability is a major headache, making a blended human-and-tool workflow absolutely essential.
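Those two error rates matter more than they first appear, because what a flag means depends on how much of your pile is actually AI-written. This worked example applies Bayes' rule in Python to the 26% and 9% figures above. The prevalence values (50% and 10%) are hypothetical assumptions, not data from the study.

```python
def share_of_flags_that_are_ai(tpr: float, fpr: float, prevalence: float) -> float:
    """Bayes' rule: P(text is AI | detector flags it).

    tpr: share of AI-written texts the tool flags (26% in the figure above)
    fpr: share of human-written texts the tool wrongly flags (9% above)
    prevalence: share of texts that are actually AI-written (an assumption)
    """
    true_flags = tpr * prevalence
    false_flags = fpr * (1 - prevalence)
    return true_flags / (true_flags + false_flags)


for prevalence in (0.5, 0.1):
    share = share_of_flags_that_are_ai(0.26, 0.09, prevalence)
    print(prevalence, round(share, 2))  # 0.5 -> 0.74, 0.1 -> 0.24
```

Under these assumptions, if half the texts are AI-written, about 74% of flagged texts really are AI. If only one in ten is, that share drops to about 24%, so roughly three out of four flags land on human writing.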

Your Questions About AI Content, Answered

Trying to get a handle on AI-generated content can feel like trying to nail jello to a wall. The tech keeps changing, and with it, the questions about how to use it, spot it, and understand its real-world impact. Let’s cut through the noise and get straight to some of the most common questions I hear.

Can AI Content Be Made 100% Undetectable?

The short answer is: it's a cat-and-mouse game. There are sophisticated tools designed to "humanize" AI text by adding stylistic quirks and varying sentence patterns, making it tough for today's detectors to catch.

But honestly, focusing on "beating a scanner" is missing the point. The real goal should be creating something valuable and original. When you take an AI draft and heavily revise it—injecting your own insights, real-world examples, and a unique voice—it naturally stops looking like AI. The most "undetectable" content is just well-written content that’s been given a serious human touch.

The real challenge isn't about fooling an algorithm; it's about creating something a human actually wants to read. If you’re just trying to pass a detector, you've already lost focus on what makes content great.

What Are the Real SEO Risks of Using AI Content?

Google has been pretty direct about this: they care about the quality of the content, not how it was made. The genuine SEO risk isn't from using AI, but from using it to churn out generic, low-effort, or spammy articles at scale. Raw, unedited AI output often lacks the nuance, firsthand experience, and factual depth needed to actually help a reader.

That's the kind of thin content that will struggle to rank and could, over time, damage your site's credibility.

To stay on the right side of this, make sure any content you produce, AI-assisted or not, lines up with Google's E-E-A-T guidelines:

- Experience: Does the writing show you've actually done the thing you're talking about?

- Expertise: Is the information correct and backed by real knowledge?

- Authoritativeness: Is your site a go-to source on this topic?

- Trustworthiness: Is the content transparent, honest, and reliable?

Think of AI as a research assistant or a first-draft machine, not the final author. The work isn't done until it's been edited, fact-checked, and enriched with human perspective.

Are AI Detector Scores Definitive Proof?

Not even close. An AI detector score is a statistical estimate, not definitive proof, whether you're in academia or the professional world. These tools are notorious for getting it wrong, producing both false positives (flagging human writing as AI) and false negatives (missing obvious AI text).

A detector score should only be seen as a starting point—a red flag that tells you to dig deeper and investigate manually. A high AI probability score is a signal to look closer, not a final verdict. Relying solely on a tool's output without any other corroborating evidence is a recipe for making unfair and inaccurate judgments.

How Can I Ensure My Own Writing Avoids AI Flags?

If you want your writing to sound human, embrace what makes human writing unique: personality and a little bit of imperfection.

Play with your sentence length. Mix short, punchy statements with longer, more descriptive ones. This creates a natural rhythm, sometimes called "burstiness," that AI models often struggle to replicate on their own.

More importantly, inject your own voice. Tell a short story. Use a specific example from your own life instead of a generic one. One of the best tricks in the book is to read your writing out loud. If it sounds clunky or unnatural, rewrite it until it flows conversationally. Ditch the robotic transition words like "moreover" or "in addition," and just write like you speak. That's the best way to avoid being mistaken for a machine.

Ready to transform your AI drafts into polished, human-quality content that connects with your audience? Rewritify is an AI rewriter that helps you refine text to be clearer, more original, and ready for your readers. Stop worrying about sounding robotic and start producing content that reflects your true voice. Try Rewritify for free today!
