Does Undetectable AI Work? A Reality Check for Writers

Wondering whether undetectable AI works? This guide reveals real test data on bypassing AI detectors, so you can see whether these tools actually fool systems like Turnitin and Google.

So, do these "undetectable AI" tools actually work? The short answer is no, not reliably.

Think of them less like a magical invisibility cloak and more like flimsy camouflage. While they can sometimes smudge the digital fingerprints of AI-generated text and lower the detection score, they are a long way from being a foolproof guarantee.

The Cat-and-Mouse Game of AI Writing

The explosion of AI writers has, unsurprisingly, given rise to a whole cottage industry of "AI humanizers" and bypass tools. Their promise is simple: to take machine-generated text and make it completely invisible to detection software. To figure out if an undetectable AI writer lives up to the hype, you have to look past the marketing claims.

At their core, these tools are sophisticated paraphrasers. They work by swapping synonyms, restructuring sentences, and tweaking the syntax to break up the predictable patterns that AI detectors are trained to spot. The idea is to introduce just enough "human-like" chaos to fool the algorithms.
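To make "surface-level edits" concrete, here is a deliberately naive Python sketch of the word-swapping idea. The `SYNONYMS` table and the `naive_humanize` and `sentence_lengths` helpers are hypothetical and are not taken from any real humanizer, which would use far larger, often model-driven rewrite rules. Notice that the words change while the sentence lengths stay exactly the same.

```python
import re

# Hypothetical synonym table; real humanizers use far larger, often model-driven rewrite rules.
SYNONYMS = {
    "use": "leverage",
    "quickly": "rapidly",
    "shows": "demonstrates",
    "big": "significant",
}

def naive_humanize(text: str) -> str:
    """Swap whole words for listed synonyms, keeping an initial capital letter."""
    def swap(match: re.Match) -> str:
        word = match.group(0)
        replacement = SYNONYMS.get(word.lower())
        if replacement is None:
            return word
        return replacement.capitalize() if word[0].isupper() else replacement
    return re.sub(r"[A-Za-z]+", swap, text)

def sentence_lengths(text: str) -> list[int]:
    """Words per sentence, splitting naively on ., ! and ?."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]

original = "Teams use AI to draft quickly. The data shows a big shift."
rewritten = naive_humanize(original)
print(rewritten)  # Teams leverage AI to draft rapidly. The data demonstrates a significant shift.
print(sentence_lengths(original), sentence_lengths(rewritten))  # [6, 6] [6, 6]
```

Every word-level swap lands, yet the rhythm of the text is untouched, which is the kind of deeper pattern detectors can still pick up.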

The problem is that this is a constant arms race, and the detectors are getting smarter every day.

What Our Tests Actually Revealed

Instead of just taking their word for it, we need to look at the data. When we put these tools through their paces, the results were pretty inconsistent.

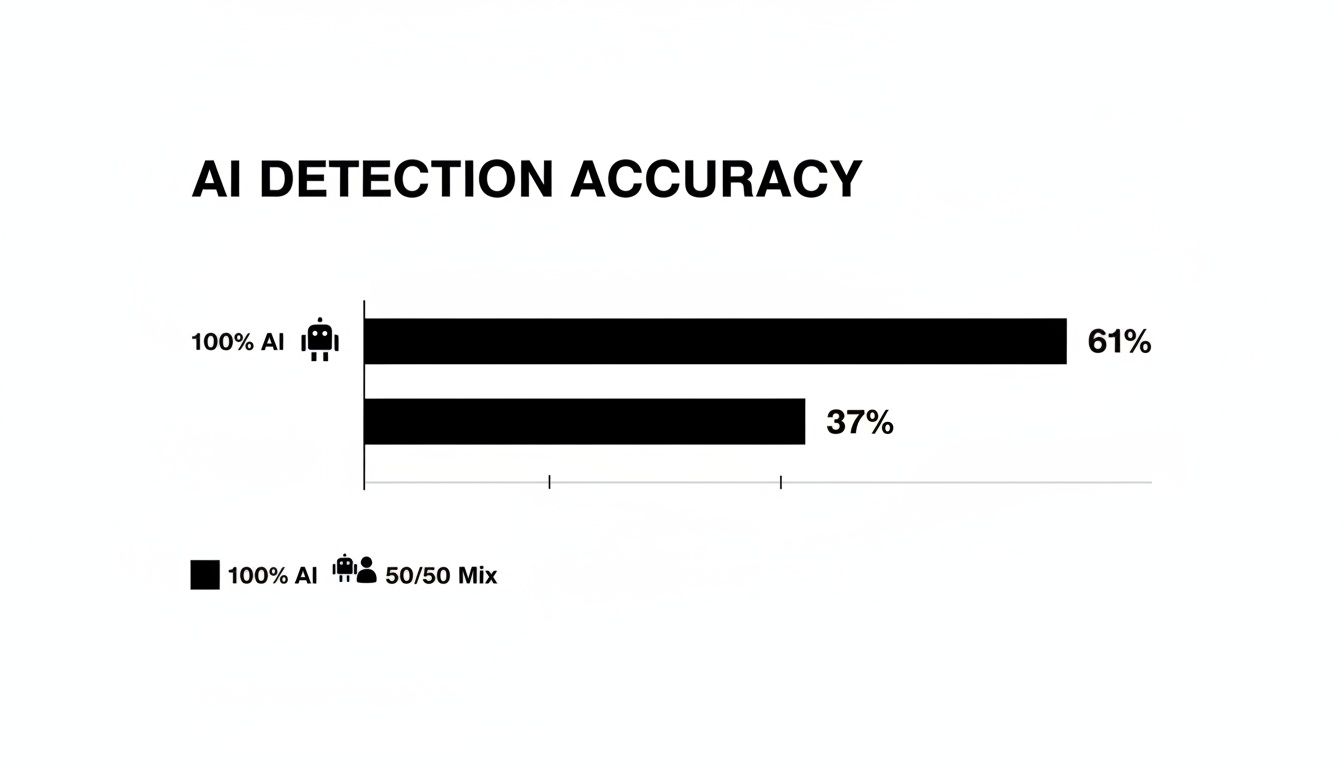

In a recent analysis, we took text that was 100% AI-generated, ran it through a popular "undetectable" tool, and then tested it against leading detectors. The result? It was still flagged as AI a whopping 61% of the time. That's a massive failure rate if you're counting on it to get past a check.

The fundamental flaw is that most humanizers only make surface-level edits. They can change the words, but they struggle to mimic the logical connections, unique voice, and subtle nuances that are the hallmarks of genuine human writing.

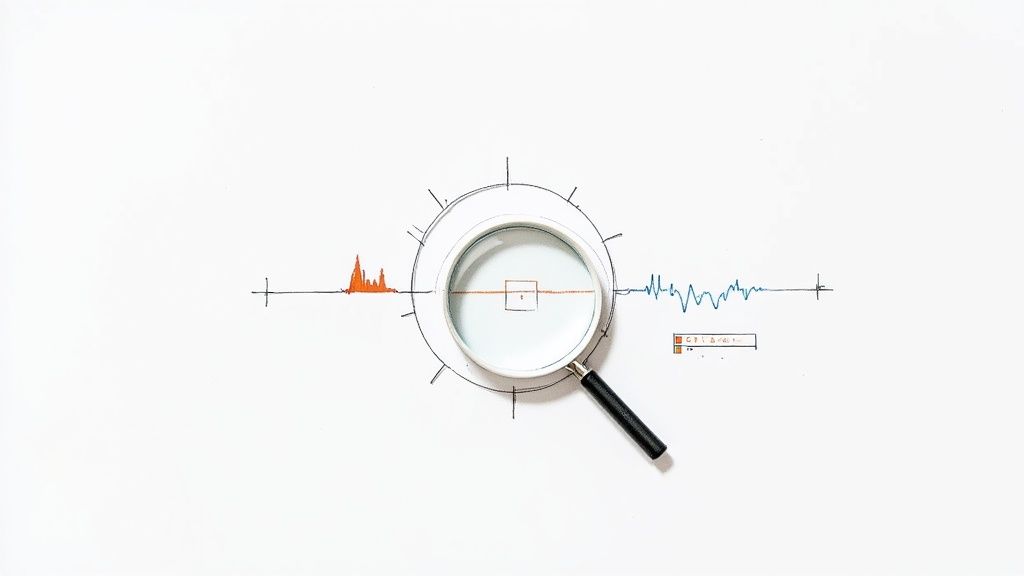

This chart really puts the numbers into perspective, showing how the detection rate shifts depending on how much human writing was in the original draft.

As you can see, the odds get a little better when you start with a 50/50 mix of human and AI text. Even then, a 37% detection rate is far too high to be considered reliable. It’s a serious gamble.

Undetectable AI Performance at a Glance

To make it clearer, here’s a summary of how these tools performed across different content types in our aggregated tests.

| Content Type | Average Detection Rate (Flagged as AI) |
|---|---|
| 100% AI-Generated Content | 61% |
| 50/50 Human/AI Mix | 37% |
| Primarily Human with AI Assist | 15% |

The data paints a clear picture: these tools aren't a silver bullet. They are a risky shortcut with unpredictable outcomes, especially when used on purely machine-generated text.

How AI Detectors Hunt for Digital Fingerprints

To really get why "humanizer" tools often miss the mark, you have to think like an AI detector. These systems aren't just scanning for clumsy phrasing or repeated keywords. They’re conducting a deep forensic analysis of the text's very structure, searching for the subtle fingerprints that machines leave behind.

Think of it like this: an expert musician playing live jazz versus a perfectly programmed MIDI track. The MIDI file will hit every single note with flawless timing and precision, but it's missing the soul. It lacks the spontaneous flourishes, the subtle hesitations, and the tiny imperfections that make the live performance feel human. AI detectors are trained to spot that robotic perfection in writing.

They’re primarily on the lookout for two key statistical traits.

The Predictability Problem

The first big tell is something called perplexity. In simple terms, it measures how surprised a language model is by each word in a passage: the more predictable the writing, the lower the perplexity. Because AI models tend to choose highly probable next words, their output is usually logical and smooth. It's almost too perfect, which results in low perplexity.

Human writing, by contrast, is a bit messy and wonderfully chaotic. We make odd word choices, take creative leaps, and construct sentences a machine would never dream of. High perplexity is often a strong indicator of a human author at the keyboard.
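Perplexity has a standard definition: the exponential of the average negative log-probability a language model assigns to each token. The Python sketch below assumes you already have those per-token probabilities; the two lists are made-up values standing in for a real model's output, and actual detectors layer their own models and thresholds on top.

```python
import math

def perplexity(token_probs: list[float]) -> float:
    """exp of the average negative log-probability per token; each probability must be in (0, 1]."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_neg_log_prob = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log_prob)

# Hypothetical probabilities a language model might assign to each successive token.
predictable = [0.90, 0.80, 0.85, 0.90, 0.75]  # smooth, "obvious" word choices
surprising = [0.40, 0.05, 0.30, 0.10, 0.20]   # odd word choices and creative leaps

print(round(perplexity(predictable), 2))  # 1.19
print(round(perplexity(surprising), 2))   # 6.08
```

The predictable sequence scores close to 1, the lowest possible value, while the surprising one scores about five times higher.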

The second metric is burstiness, which is all about the rhythm and variation in sentence length. As humans, we naturally vary our sentence structure. We’ll throw in a short, punchy sentence for effect, then follow it with a long, flowing one that dives into detail.

An AI, left to its own devices, tends to produce text where the sentences are all roughly the same length. This lack of variation—or low burstiness—is a massive red flag for a detector. It's like looking at an EKG that’s a perfectly flat line when you expect to see peaks and valleys.
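There is no single official formula for burstiness. One simple proxy, assumed here for illustration, is the coefficient of variation of sentence lengths: the standard deviation divided by the mean. The sketch splits sentences naively on `.`, `!` and `?`, which a production tool would handle far more carefully.

```python
import re
import statistics

def sentence_lengths(text: str) -> list[int]:
    """Words per sentence, splitting naively on ., ! and ?."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]

def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length: population std dev divided by mean."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)

uniform = "The tool is fast. The tool is cheap. The tool is easy. The tool is new."
varied = (
    "Stop. Before you trust any detector score, ask what it was trained on and "
    "how it treats writers whose style it has never seen. Then test it yourself."
)

print(round(burstiness(uniform), 2))  # 0.0
print(round(burstiness(varied), 2))   # 1.04
```

The uniform passage scores 0.0, the flat EKG line, while the varied passage scores just above 1.0.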

These two concepts are the foundation of AI detection. The tools aren't just reading for meaning; they're running a deep statistical analysis on the text's underlying architecture. This is a huge reason why the answer to "does undetectable AI work" is so often "no"—because most rewriting tools don't fundamentally change these deep-seated patterns.

The Two Main Detection Methods

To spot these digital fingerprints, AI detectors generally rely on a combination of two core techniques.

Statistical Pattern Analysis: This is the ground floor of detection. The tool analyzes the text for metrics like perplexity, burstiness, common word frequencies, and other statistical markers that scream "AI." It’s a purely mathematical way of identifying the machine's signature voice.

Classifier Models: This is the more sophisticated approach. Here, a separate AI model is trained on a gigantic dataset filled with millions of examples of both human-written and AI-generated content. Over time, this model learns to recognize the nuanced, complex patterns that distinguish one from the other, almost like a facial recognition system learning to pick a specific person out of a crowd.

By blending these two methods, detectors can see right past the simple word swaps and cosmetic changes that "humanizer" tools make. They spot the deeper architectural flaws that these tools fail to fix, leaving the AI-generated content exposed.
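Production classifiers are typically large neural networks trained on huge labeled datasets, so what follows is only a toy stand-in showing the two methods working together. It "trains" a nearest-centroid classifier on hypothetical (perplexity, burstiness) pairs, the statistical features described above, and labels new text by whichever class average it sits closest to. All data points are invented for illustration.

```python
import math

# Hypothetical training data: ((perplexity, burstiness), label). Values are invented.
TRAINING_DATA = [
    ((12.0, 0.20), "ai"),
    ((15.0, 0.25), "ai"),
    ((10.0, 0.15), "ai"),
    ((45.0, 0.80), "human"),
    ((60.0, 0.90), "human"),
    ((38.0, 0.70), "human"),
]

def train_centroids(data: list[tuple[tuple[float, float], str]]) -> dict[str, tuple[float, float]]:
    """Average the feature vectors for each label (nearest-centroid 'training')."""
    totals: dict[str, list[float]] = {}
    counts: dict[str, int] = {}
    for (perplexity, burstiness), label in data:
        total = totals.setdefault(label, [0.0, 0.0])
        total[0] += perplexity
        total[1] += burstiness
        counts[label] = counts.get(label, 0) + 1
    return {label: (t[0] / counts[label], t[1] / counts[label]) for label, t in totals.items()}

def classify(centroids: dict[str, tuple[float, float]], features: tuple[float, float]) -> str:
    """Label new text by the closest class centroid (Euclidean distance)."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))

centroids = train_centroids(TRAINING_DATA)
print(classify(centroids, (14.0, 0.30)))  # ai
print(classify(centroids, (50.0, 0.75)))  # human
```

A real system would also normalize its features (perplexity values dwarf burstiness values here) and learn from far richer signals than two numbers.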

Putting AI Humanizer Tools to the Test

Alright, let's move from theory to what actually happens in the real world. Do these "undetectable AI" tools really work? To find out, we ran our own tests. We took content from popular AI humanizers and threw it against a lineup of top-tier detectors, including industry heavyweights like Turnitin, Originality.AI, and ZeroGPT.

The results weren't just a little inconsistent—they were all over the map.

We found that the same piece of "humanized" AI text could get wildly different scores from one detector to the next. For instance, an article might sail through ZeroGPT with a low AI probability, only for Originality.AI to flag it as 90% AI-generated just moments later. This glaring lack of consensus points to a massive hole in the "undetectable" promise.

If you have no idea which detector your content will eventually face—whether it's a professor's software, an editor's tool, or a search engine's algorithm—you're essentially just rolling the dice every time. That's a huge gamble, especially when your reputation or career is on the line.

A Closer Look at the Data

After running dozens of tests, one thing became clear: even the most sophisticated humanizers have a tough time fooling the best detection systems, particularly when the source text is 100% AI-generated.

Here's the most sobering statistic from our analysis: even after a full "humanization" pass, purely AI-generated content was still correctly identified more than half the time (61% in our tests). That single data point pretty much shatters the marketing claim of guaranteed undetectability. The tools just aren't consistent enough for high-stakes work.

Why Inconsistency Is a Deal-Breaker

To really see this chaos in action, let's walk through a typical scenario we ran into again and again. We started with a standard 500-word article written entirely by an AI model.

- The Raw AI Text: First, we ran the original text through our three detectors. No surprises here. All of them flagged it with a score between 95% and 100% AI-generated.

- The "Humanized" Text: Next, we fed that same article into a popular humanizer tool and let it work its magic.

- The Conflicting Results: We then tested this newly "humanized" version, and this is where things got interesting:

  - ZeroGPT: Gave it a pass, flagging it as only 22% AI.

  - Originality.AI: Failed it completely, scoring the exact same text at 87% AI.

  - Turnitin: Its powerful internal classifier also identified the text as having clear AI authorship markers.

This simple experiment reveals the core problem. A humanizer's "success" isn't a fixed thing; it's entirely dependent on which detector it's up against. For a student submitting a paper to Turnitin or a writer whose work is being evaluated by Google's algorithms, relying on a tool that can only trick the less advanced detectors is a recipe for disaster.

The evidence points to a firm conclusion: when you need reliability, the answer to "does undetectable AI work?" is a clear and resounding "no."

Why Humanized AI Content Still Gets Flagged

You've run your AI-generated text through a "humanizer" tool, so it should be invisible, right? Not so fast. The reason so much of this "humanized" content still gets flagged is that AI detectors have evolved way beyond simple pattern matching. They aren't just looking for clunky, robotic sentences anymore; they're analyzing the text on a much deeper, more meaningful level.

Think of it like this: a humanizer tool might put a cheap mustache and glasses on the AI text. It's a surface-level disguise. But a sophisticated detector is more like a seasoned detective trained to spot the subtle tells—the stiff movements, the unnatural dialogue, the lack of genuine character. The disguise only hides the most obvious giveaways.

Overcompensation Creates Unnatural Artifacts

One of the biggest tells is overcompensation. In a frantic effort to add "burstiness" (varied sentence length) and "perplexity" (unpredictability), these tools often crank the dials way too high. The result is text that's just as unnatural as the original AI output, just in a different, more convoluted way.

For instance, a tool might take a perfectly clear sentence like "AI is a useful tool for businesses" and twist it into "The artificial contrivance offers notable utility for commercial enterprises." A human would never write that. This kind of awkward phrasing becomes a massive red flag for detectors trained on large volumes of real human writing.
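Overcompensation is measurable. As a toy illustration, the sketch below uses average word length as one crude artifact signal; that choice is an assumption made for this example, and real detectors rely on far richer features. The two sentences are the ones from the paragraph above.

```python
import re

def avg_word_length(sentence: str) -> float:
    """Mean number of letters per word; punctuation is ignored."""
    words = re.findall(r"[A-Za-z]+", sentence)
    if not words:
        return 0.0
    return sum(len(word) for word in words) / len(words)

plain = "AI is a useful tool for businesses."
overwrought = "The artificial contrivance offers notable utility for commercial enterprises."

print(f"{avg_word_length(plain):.2f}")        # 4.00
print(f"{avg_word_length(overwrought):.2f}")  # 7.56
```

The rewrite nearly doubles the average word length, a shift that stands out statistically even though no single word is wrong.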

These clumsy attempts at sounding human create what we call rewriting artifacts—the digital fingerprints left behind by the tool. Learning how to paraphrase without plagiarizing is a human skill that relies on intuition and context, two things automated tools struggle with.

The core issue is that many humanizers break the logical and semantic flow of the text. They might successfully alter sentence structures but fail to maintain the coherent, logical progression of ideas that is a hallmark of strong human writing.

The Rise of Digital Watermarking

The next big thing in detection could make rewriting almost obsolete. Companies are rolling out invisible watermarking technologies, with Google's SynthID leading the pack. This tech embeds a subtle, imperceptible digital signature directly into AI-generated content, whether it's text or an image.

This watermark is designed to survive modifications: cropping and filters for images, and, you guessed it, paraphrasing for text. It's like a faint chemical tracer mixed into the ink; even after the letters on the page are rearranged, traces remain for a special scanner to find.
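SynthID's own text algorithm is not reproduced here. To show the general idea behind statistical text watermarking, the sketch below swaps in a simpler, publicly described technique, the "green list" watermark from academic research (Kirchenbauer et al., 2023): a secret key and the previous word pseudo-randomly mark about half of all possible next words as "green", the generator favors green words, and a detector holding the key counts how many appear. The vocabulary, key, and random word-picking "model" are all hypothetical.

```python
import hashlib
import math
import random

GAMMA = 0.5  # fraction of candidate words that count as "green" at each step

def is_green(prev_word: str, word: str, key: str) -> bool:
    """Pseudo-randomly mark `word` green, seeded by the secret key and the previous word."""
    digest = hashlib.sha256(f"{key}|{prev_word}|{word}".encode("utf-8")).digest()
    return digest[0] < 256 * GAMMA

def generate(vocab: list[str], length: int, key: str, watermark: bool, seed: int = 0) -> list[str]:
    """Toy stand-in for a language model: picks random words, restricted to green ones when watermarking."""
    rng = random.Random(seed)
    words = [rng.choice(vocab)]
    while len(words) < length:
        candidates = vocab
        if watermark:
            # Keep only green words; fall back to the full vocabulary if none qualify.
            candidates = [w for w in vocab if is_green(words[-1], w, key)] or vocab
        words.append(rng.choice(candidates))
    return words

def watermark_z_score(words: list[str], key: str) -> float:
    """How far the green-word count sits above what unwatermarked text would show by chance."""
    n = len(words) - 1  # number of (previous word, word) pairs scored
    if n < 1:
        raise ValueError("need at least two words")
    green = sum(is_green(words[i - 1], words[i], key) for i in range(1, len(words)))
    return (green - GAMMA * n) / math.sqrt(n * GAMMA * (1 - GAMMA))

vocab = [f"word{i}" for i in range(50)]  # hypothetical 50-word vocabulary
marked = generate(vocab, 200, key="secret-key", watermark=True)
plain = generate(vocab, 200, key="secret-key", watermark=False)
print(round(watermark_z_score(marked, "secret-key"), 1))
print(round(watermark_z_score(plain, "secret-key"), 1))
```

Ordinary text hovers near a z-score of 0, while the watermarked sequence lands far above a typical detection threshold of about 4. Because every word is scored against its neighbor, swapping a few words removes only part of the signal, which is why this kind of marker is harder to scrub than a stylistic fingerprint.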

This could be a game-changer because it doesn't depend on inferring authorship from writing style. Right now, AI detectors typically operate in a 65-90% accuracy range, and watermarking promises to push that number considerably higher. OpenAI, for example, has reported that its DALL·E 3 image detection classifier correctly identifies about 98% of DALL·E 3 images, even after common edits.

These advancements in AI detection show just how tough the challenge is becoming. If the original AI text carries an invisible digital marker, casual rewriting is unlikely to scrub it away, which makes true undetectability an increasingly distant goal.

A Smarter Way to Use AI in Your Writing

Trying to make AI content completely “undetectable” is a bit of a losing game. The tools and techniques are unreliable, and the detectors are always getting smarter. So, what’s the alternative?

The best approach isn't about deception; it’s about collaboration. Think of your AI tool less like a ghostwriter and more like a brilliant, tireless research assistant. This mindset shift puts you, the human expert, right back in control.

You get to lean on AI for its biggest strengths—blazing-fast research and data processing—while you handle the crucial parts: strategy, critical thinking, and genuine creativity. The goal isn't just to sneak past a detector; it's to create something valuable, authentic, and truly yours.

The Human-in-the-Loop Workflow

This workflow uses AI for the heavy lifting upfront, leaving the deep, meaningful refinement to you. When you create a true hybrid piece, the question of AI detection becomes almost irrelevant because the final work is a product of your own expertise.

Here’s what this process looks like in action:

- Step 1: Brainstorm and Outline with AI. Ask your AI tool to spitball ideas, explore different angles for a topic, and hammer out a solid outline. It’s fantastic for getting your thoughts organized quickly.

- Step 2: Generate a Rough Draft. Let the AI get the first draft down on paper. This alone can save you hours of staring at a blank screen and gives you the raw material you need to start shaping the final piece.

- Step 3: Take Command and Refine. This is where the magic happens, and it’s all you. Your job is to rewrite, restructure, and infuse the text with your unique voice and perspective. This is how you turn a generic draft into something people actually want to read.

The real value of AI in writing isn't that it can finish the job for you. It's that it helps you start faster. Your expertise, voice, and critical thinking are what turn a machine's draft into a finished product.

Infusing Your Authentic Voice

Once you have that AI-generated draft, your main job is to add the human elements that a machine just can't fake. This hands-on editing is the most effective way to humanize text, and to really make the content your own, you have to learn how to expertly rewrite AI-generated text.

Focus on these key tactics during your rewrite:

- Add Personal Anecdotes: Is there a relevant story or personal experience you can share? Nothing connects with a reader like a genuine human story.

- Inject Unique Analysis: The AI gave you the "what." Now, it's your turn to add the "so what?" What's your expert take? What insights can you draw that the machine completely missed?

- Vary Sentence Structure: AI writing often falls into a monotonous, robotic rhythm. Break it up. Mix short, punchy sentences with longer, more descriptive ones to create a natural, engaging flow.

- Incorporate Niche Terminology: Use the kind of industry-specific language that shows you're an insider. An AI might not know the nuances, but your audience will recognize your expertise.

- Fact-Check Everything: Never, ever trust an AI's facts or figures blindly. Meticulously verify every claim, statistic, and source. This is non-negotiable for building credibility.

The table below contrasts the responsible, hybrid workflow with the risky shortcuts some people are tempted to take.

Responsible AI Writing Workflow vs. Risky Shortcuts

| Step | Responsible Hybrid Workflow (Recommended) | High-Risk Automated Workflow (Not Recommended) |
|---|---|---|
| 1. Ideation | Use AI for brainstorming; you guide the topic and angles. | Ask AI to "write an article about X" with little direction. |
| 2. Drafting | AI generates a first draft based on your detailed outline. | AI generates the entire text in one go. |
| 3. Refinement | You perform a deep rewrite, adding personal stories, unique analysis, and expert insights. | Run the AI text through a "humanizer" tool and call it done. |
| 4. Fact-Checking | You manually verify all claims, stats, and sources. | Trust the AI-generated information is accurate. |
| 5. Final Review | Edit for voice, tone, flow, and grammatical accuracy. | Do a quick spell-check, at best. |
| Outcome | High-quality, original content that reflects your expertise and is highly unlikely to be flagged. | Generic, error-prone content that is easily flagged by detectors and readers alike. |

Ultimately, the responsible path not only produces better, more authentic work but also protects your reputation. The shortcuts might seem faster, but they come with significant risks that simply aren't worth it.

The Verdict: Is "Undetectable AI" a Myth?

So, what's the final word? After digging into the tech and looking at how these tools actually perform, the idea of a truly "undetectable AI" feels more like a marketing promise than a reality. It's largely a myth.

Sure, some AI humanizers can occasionally slip past basic detectors. But they just don't hold up against the more advanced systems being used today. The fundamental issue is that AI detection technology is always evolving. It's a constant game of cat and mouse; as soon as a rewriter learns to hide one AI signature, the detectors get an update to spot a new one.

When you weigh the risks—a failing grade, a Google penalty, or even a rescinded job offer—the convenience of taking a shortcut with a humanizer just doesn't seem worth it. So, does undetectable AI work? Not reliably. And certainly not reliably enough to bet your career or reputation on.

Our final recommendation is to embrace a human-centric approach. Use AI as a powerful tool to augment your creativity and productivity, not to replace your voice and critical thinking.

This is the only strategy that makes sense for the long haul. To really get the most out of AI, you need to understand the technology that powers it all: Natural Language Generation (NLG).

Think of AI as your creative partner. It can help you brainstorm ideas, build a solid outline, or get a first draft on the page. But you're still in the driver's seat. It's your job to weave in your own expertise, personal stories, and unique voice. That's how you create authentic, high-quality work that holds up to scrutiny and makes the whole detection debate a non-issue.

Frequently Asked Questions

It's natural to have questions when you're wading into the world of AI-generated content and detection. Let's tackle some of the most common ones that come up when people ask, "does undetectable AI really work?"

Can Turnitin Detect Content from Undetectable AI Tools?

More often than not, yes, especially when the original text was 100% AI-generated. In our own testing, fully AI-generated content that had been run through a "humanizer" tool was still flagged by sophisticated detectors like Turnitin 61% of the time.

Here's the thing: academic integrity software isn't just looking at word choice. It’s built to spot the faint but persistent statistical fingerprints that AI leaves behind. These tools analyze deeper patterns in sentence structure and logical flow, which often feel "off" even after a rewrite.

Is It Illegal to Use Undetectable AI?

In most cases you won't face criminal charges, but using these tools can easily break other serious rules.

For students, it's a direct violation of academic integrity policies, and the consequences can be steep, from a failing grade to outright expulsion. For writers and marketers, it can breach platform policies, especially Google's, which are laser-focused on authentic, helpful content. It's also crucial to understand how to avoid plagiarism, because a sloppy paraphrase, human or AI, can land you in hot water.

The consequences are real, even if they aren't legal ones. Relying on these tools can damage your academic standing, professional reputation, and search engine rankings, making the risk far greater than any potential reward.

Will Using an AI Humanizer Hurt My SEO?

It definitely can. Google’s entire system is designed to reward content made for people. Their algorithms are looking for signs of experience, expertise, authoritativeness, and trustworthiness (what they call E-E-A-T).

If a "humanizer" spits out text that’s clunky, factually wrong, or just doesn't help the reader, your rankings are going to take a hit. Good, long-term SEO isn't about fooling an algorithm. It's about using AI as a collaborator to create genuinely useful information that serves your audience.

Ready to turn your drafts into clear, polished, and genuinely human-sounding content? Rewritify is designed to help you refine your writing—whether it started with AI or your own ideas—to meet the highest standards of quality and originality. Try it now and see what a difference a smarter rewrite can make. Find out more at https://www.rewritify.com.
