Does Turnitin Check for AI and How Does It Actually Work?

Does Turnitin check for AI? Yes. We explain how its detection works, why false positives happen, and how to use AI ethically to protect your academic integrity.

So, does Turnitin check for AI-generated content? The short answer is yes, but it’s far from a simple yes-or-no situation. The technology has some important nuances and limitations that both students and educators really need to wrap their heads around.

Think of it as a powerful new tool in the academic integrity toolbox, but definitely not an infallible judge.

Understanding Turnitin AI Detection

With the explosion of AI writing tools, Turnitin rolled out a specific detector that operates completely separately from its classic plagiarism checker. The plagiarism tool works by scanning a paper for text that matches existing sources in its massive database—books, articles, websites, and other student papers. It's a matching game.

The AI detector, on the other hand, works more like a linguistic analyst. It doesn't look for matches. Instead, it scrutinizes the writing itself, searching for the subtle patterns and statistical fingerprints that tend to show up in machine-generated text.

This distinction is crucial. Plagiarism is about finding copied content. AI detection is about identifying the style of the writing.

Here’s a simple analogy:

- Plagiarism Check: This is like a detective checking for stolen goods by comparing serial numbers against a list of stolen items. It's looking for an exact match.

- AI Detection: This is more like a handwriting expert analyzing a note to determine if the author was a person or a machine trying to imitate human handwriting. It's all about form, predictability, and style.

This technology is moving fast. By July 2023, just a few months after its launch, Turnitin had already scanned over 65 million papers. The results were fascinating: they found that over 10% of those papers contained at least 20% AI-generated writing. You can dig into the early findings on Turnitin's AI detector to see the data for yourself.

Accuracy and False Positives

Turnitin states its AI detection model has a 98% accuracy rate, but there’s a really important trade-off to understand here. To keep the rate of "false positives"—that is, incorrectly flagging human writing as AI-generated—below 1%, the system is designed to be conservative.

What does that mean in practice? It means the system will sometimes let AI-written text slide by on purpose to avoid wrongly accusing a student.

The system is calibrated to accept a 15% "false negative" rate. This means it’s intentionally designed to miss some AI-generated content to protect students from false accusations. This makes it clear that an AI score is meant to be an indicator, not definitive proof.
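
To see what those two rates mean for a real class, here is a rough back-of-the-envelope sketch in Python. The 1% false positive and 15% false negative figures come from the numbers above. The cohort size, the share of AI-written papers, and the function name are made-up assumptions for illustration, and applying the rates per paper is a simplification.

```python
def expected_outcomes(total_papers, ai_share,
                      false_positive_rate=0.01, false_negative_rate=0.15):
    """Estimate how many papers land in each bucket for a hypothetical cohort."""
    ai_papers = total_papers * ai_share
    human_papers = total_papers - ai_papers

    missed_ai = ai_papers * false_negative_rate        # AI text that slips through
    caught_ai = ai_papers - missed_ai                  # AI text correctly flagged
    false_flags = human_papers * false_positive_rate   # human work wrongly flagged

    flagged = caught_ai + false_flags
    return {
        "caught_ai": caught_ai,
        "missed_ai": missed_ai,
        "false_flags": false_flags,
        # Share of all flags that point at human-written work
        "false_flag_share": false_flags / flagged if flagged else 0.0,
    }


# Hypothetical cohort: 1,000 papers, 10% of them AI-written (assumed numbers)
result = expected_outcomes(total_papers=1000, ai_share=0.10)
for name, value in result.items():
    print(f"{name}: {value:.2f}")
```

In this made-up cohort, roughly 85 AI-written papers would be flagged, about 15 would pass unnoticed, and about 9 human-written papers would be flagged by mistake. Even a small false positive rate produces real false flags once the number of honest submissions is large.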

Understanding this balance is everything. The tool is built to start a conversation between an instructor and a student, not to end one with an automatic penalty.

Here's a quick rundown of the key stats and features.

Turnitin AI Detection at a Glance

This table breaks down what Turnitin's AI detection tool does, its claimed accuracy, and the important numbers to keep in mind.

| Feature | Statistic or Detail |
|---|---|
| Primary Function | Identifies text likely generated by AI models (like ChatGPT). |
| Stated Accuracy | Claims over 98% accuracy for detecting AI-written content. |
| False Positive Rate | Maintained at less than 1% to minimize wrongful accusations. |
| False Negative Rate | Intentionally set at ~15% to prioritize avoiding false positives. |

Ultimately, these numbers show that while the detector is a sophisticated tool, it's deliberately imperfect to protect the innocent.

How Turnitin’s AI Detection Actually Works

Think of Turnitin’s AI detector less like a plagiarism cop and more like a linguistic forensic analyst. It’s not looking for copied text. Instead, it’s trained to spot the subtle, almost invisible fingerprints that AI models leave all over their writing. The system learned its craft by analyzing a colossal library of text, some written by humans and some by AI, until it could reliably tell the difference.

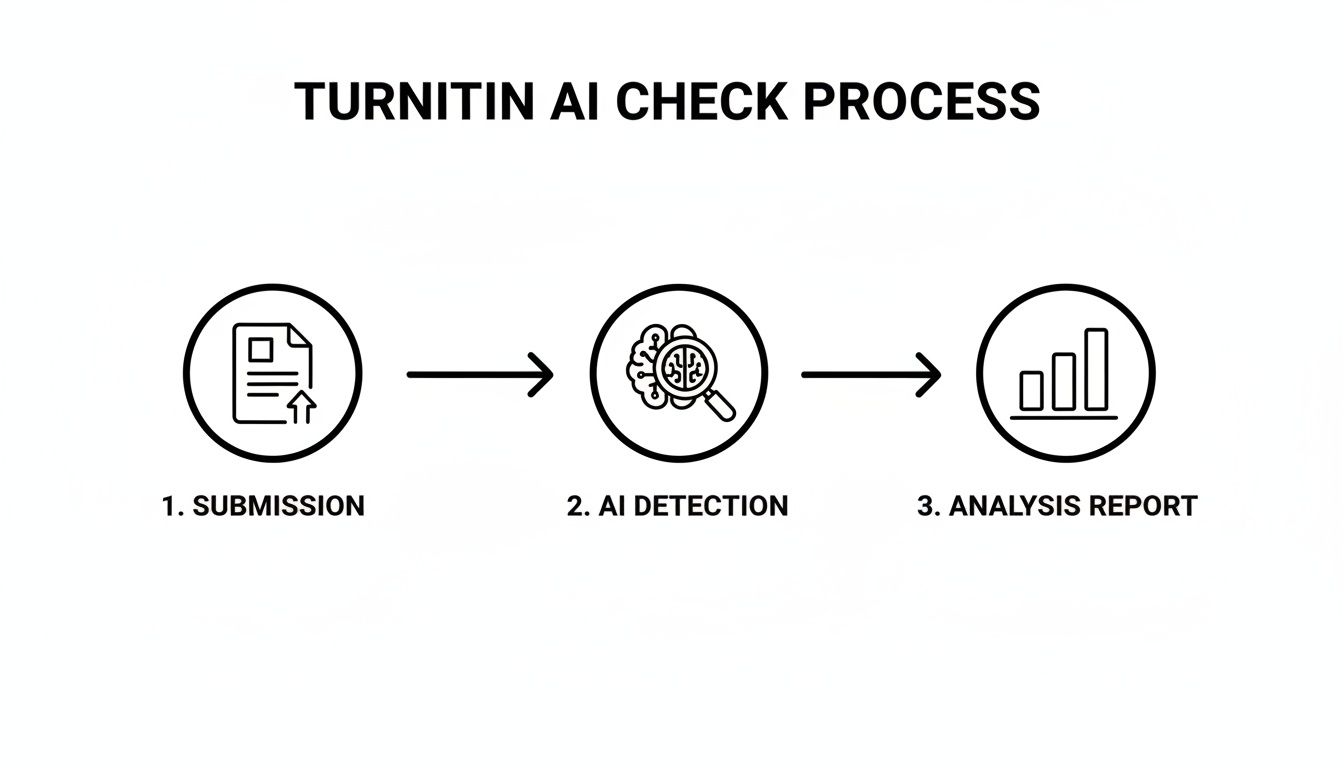

The moment you upload a paper, the process kicks off. Turnitin’s AI doesn't read the whole document at once; it slices it into smaller, manageable chunks—think sentences and paragraphs. This segmented approach is key, allowing the detector to scrutinize each piece of text individually for tell-tale signs of AI, a far more detailed method than a simple similarity report.
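
The chunking idea can be sketched in a few lines of Python. Turnitin's actual segmentation rules are not described here, so the naive sentence splitter, the three-sentence window, and the overlap between windows are all assumptions chosen only to illustrate the approach.

```python
import re


def split_sentences(text):
    """Naive splitter: break after ., ! or ? when followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def make_segments(text, window=3, step=1):
    """Group sentences into overlapping windows so each is scored in context."""
    sentences = split_sentences(text)
    if len(sentences) <= window:
        return [" ".join(sentences)] if sentences else []
    return [
        " ".join(sentences[i:i + window])
        for i in range(0, len(sentences) - window + 1, step)
    ]


sample = "First point. Second point! Is this the third? Here is a fourth. And a fifth."
for segment in make_segments(sample):
    print(segment)
```

Each printed segment is a small, self-contained piece of the paper that a detector could then score on its own.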

Once segmented, each chunk is put under the microscope and analyzed for specific linguistic patterns. To really get what it's looking for, it helps to understand the underlying GPT models that drive tools like ChatGPT. The detector zeroes in on things like word choice predictability and sentence consistency, the hallmarks of machine-generated text.

This flowchart maps out the journey a paper takes from submission to the final analysis report.

As you can see, the system is built on a multi-stage evaluation that focuses on the characteristics of the writing itself, not just matching words from a database.

The Science of Predictability

One of the biggest giveaways of AI writing is its unnatural smoothness. Large language models (LLMs) are built to predict the most statistically likely word to come next, which often results in text that feels a bit too perfect, a little too sterile. This uncanny consistency is a massive red flag for the detector.

Turnitin's model essentially gives sentences a probability score. If the writing is too predictable, it gets flagged. Human writing, by contrast, is messier, more surprising, and full of variation.

Here’s a simple way to think about it: A human writer might use a quirky word, an odd turn of phrase, or even a slightly clunky sentence. An AI, on the other hand, almost always defaults to the most common, logical, and expected choice. Turnitin is trained to sniff out that lack of natural chaos.
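
A toy example makes "predictability" more concrete. The sketch below trains a tiny bigram model on a few sentences and measures perplexity, a standard way of expressing how surprised a language model is by a piece of text. This is a stand-in for illustration only: the corpus, the function names, and the bigram model itself are assumptions, and nothing here reflects Turnitin's actual model.

```python
import math
from collections import Counter


def train_bigram(corpus_tokens):
    """Count single words and adjacent word pairs in a list of tokens."""
    unigrams = Counter(corpus_tokens)
    bigrams = Counter(zip(corpus_tokens, corpus_tokens[1:]))
    return unigrams, bigrams


def perplexity(tokens, unigrams, bigrams):
    """Average surprise of a sequence under an add-one-smoothed bigram model."""
    vocab_size = len(unigrams) + 1  # extra bucket for unseen words
    pairs = list(zip(tokens, tokens[1:]))
    log_prob = 0.0
    for prev, word in pairs:
        prob = (bigrams[(prev, word)] + 1) / (unigrams[prev] + vocab_size)
        log_prob += math.log(prob)
    return math.exp(-log_prob / len(pairs))


corpus = ("the cat sat on the mat . the dog sat on the rug . "
          "the cat sat on the rug .").split()
unigrams, bigrams = train_bigram(corpus)

predictable = "the dog sat on the mat .".split()
surprising = "mat the on rug dog cat sat .".split()

print("predictable:", perplexity(predictable, unigrams, bigrams))
print("surprising: ", perplexity(surprising, unigrams, bigrams))
```

The first sentence follows patterns the model has already seen, so it scores a lower perplexity than the scrambled one. A detector working along these lines would treat consistently low surprise across a whole paper as a warning sign.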

Of course, this is all part of a bigger cat-and-mouse game. AI writing tools are getting better at sounding human every day. If you're curious about how this technological race is playing out, it's interesting to see how effective "humanizer" tools are. Our guide at https://www.rewritify.com/blog/does-undetectable-ai-work digs into this very topic and asks whether undetectable AI tools actually work. This constant evolution is a reminder that while Turnitin’s technology is sophisticated, it's far from a perfect or finished system.

Decoding Your Turnitin AI Score

Seeing an AI score on your Turnitin report for the first time can be jarring. Before you panic, it's important to understand what that number actually represents. It’s not a definite "guilty" verdict for academic misconduct; it's a measure of the tool's confidence that some of your text might have been generated by a machine.

I like to compare it to a weather forecast. When a meteorologist predicts an 80% chance of rain, it doesn't mean it’s absolutely going to pour. It just means the conditions are ripe for it. A high Turnitin AI score works the same way—it suggests your writing shows patterns that look a lot like AI, which is a strong signal for your instructor to take a closer look.

Think of the score as a conversation starter, not the final word. It’s designed to flag text for a human review, not to make an accusation on its own.

What the Numbers Actually Mean

Your Turnitin report doesn't just give you a single percentage. It also highlights the exact sentences or paragraphs that its algorithm found suspicious. This is a genuinely helpful feature, as it shows both you and your instructor precisely which parts of your paper set off the detector.

Looking at these highlighted sections is the key to understanding your score. It’s not a blanket judgment on your entire paper but a running total of all those flagged portions.
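
As a rough illustration of that "running total" idea, the sketch below adds up the words in flagged segments and divides by the total word count. Weighting by words is an assumption made for this example; how Turnitin actually aggregates flagged text into one percentage is not spelled out here.

```python
def overall_ai_percentage(segments):
    """segments: list of (text, flagged) pairs. Returns flagged share by word count."""
    total_words = sum(len(text.split()) for text, _ in segments)
    flagged_words = sum(len(text.split()) for text, flagged in segments if flagged)
    if total_words == 0:
        return 0.0
    return 100 * flagged_words / total_words


# Hypothetical report: one of three passages was highlighted by the detector
report = [
    ("This opening paragraph was drafted by hand over two evenings.", False),
    ("In conclusion, it is important to note that technology plays a vital role.", True),
    ("My own field notes disagree with that claim.", False),
]
print(f"{overall_ai_percentage(report):.0f}% of words sit in flagged segments")
```

The point is that the headline number is only a summary. The highlighted passages underneath it are what you and your instructor should actually look at.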

The AI score is a probability, not proof. It's just one piece of data meant to help an educator make a judgment, not replace it. The assignment's context and a student's previous work are still the most important factors.

While every school might have its own guidelines, here’s a general way to think about the score ranges:

- 0%: Great news. The detector didn't find any text it considers AI-generated.

- 1-20%: This lower range could mean a few different things. It might pick up on heavy editing from a tool like Grammarly, or maybe a few sentences that were rephrased with AI assistance. It could also just be a false positive that an instructor can quickly dismiss.

- 21-100%: A score this high is a much stronger indicator that large chunks of your text were likely written by AI. This will almost certainly lead to a more serious review and a conversation with your instructor.
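
For anyone who wants to apply these bands consistently, for example when triaging a batch of reports, they can be written down as a small helper. The band boundaries come from the list above. The function name and wording are illustrative assumptions, and this is not an official Turnitin scale.

```python
def interpret_ai_score(score):
    """Map a 0-100 AI score to the rough bands described in this article."""
    if not 0 <= score <= 100:
        raise ValueError("score must be between 0 and 100")
    if score == 0:
        return "no AI-like text detected"
    if score <= 20:
        return "low: possible editing-tool traces or a false positive"
    return "high: likely to prompt a closer review"


for s in (0, 12, 20, 21, 85):
    print(s, "->", interpret_ai_score(s))
```

Whatever band a score falls into, it is still only a prompt for human review, and your school's own guidelines take priority.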

At the end of the day, this score is meant to be a constructive tool. For instructors, it helps spot potential problems. For students, seeing what gets flagged can help you understand what parts of your writing sound robotic, giving you a chance to develop a more authentic voice. It’s all about opening up a dialogue about what academic integrity looks like now that this technology is here.

The Critical Risk of False Positives

So, we know Turnitin checks for AI, but how reliable is that check? That’s where things get complicated. The single biggest fear with any AI detection tool is the false positive—that moment when a student’s completely original, human-written work gets incorrectly flagged as AI-generated. This isn't just a small technical error; it can spiral into a serious academic misconduct accusation.

These mistakes happen because AI detectors are trained to spot overly predictable, uniform, and almost "too perfect" writing patterns. The issue is, certain human writing styles can accidentally mirror those exact patterns, creating the perfect storm for a mistaken flag. This reality puts certain groups of students at a much higher risk of being falsely accused.

Who Is Most at Risk for False Positives?

Some writing styles just look more "robotic" to an algorithm, even when they're 100% human. Understanding what triggers these flags is crucial for both students and instructors who need to interpret AI scores with a healthy dose of skepticism.

Students who are often more likely to get a false positive include:

- Non-native English speakers: They frequently learn English in a very structured, formal way. The result can be prose that is grammatically perfect but stylistically simple, which an AI detector can easily misread.

- Neurodivergent individuals: Their natural writing processes and styles might not follow typical patterns, sometimes producing text that an algorithm misinterprets as machine-generated.

- Students in technical fields: They are trained to write with direct, precise, and often formulaic language. This style intentionally lacks the "creative chaos" that detectors often associate with human writing.

This isn't a problem with the student's writing at all—it's a fundamental limitation of the technology. The algorithm is just matching patterns; it has no way of understanding the human context behind the words.

The table below breaks down some common writing traits that, while perfectly normal for humans, can sometimes raise red flags for an AI detection tool.

Factors That Can Increase False Positive Risk

| Potential Trigger | Why It Can Be Misidentified as AI |
| :--- | :--- |
| Formulaic or structured writing | AI models excel at generating predictable sentence structures, which can look similar to writing from someone following a strict academic template. |
| Simple vocabulary and sentence structure | Non-native speakers or writers aiming for clarity might use simpler language, which algorithms can mistake for the less nuanced output of an AI. |
| Lack of personal voice or idioms | Technical or formal academic writing often discourages a strong personal voice, making the text appear more neutral and, to a machine, more AI-like. |
| Grammatically perfect text | While a good goal, text with zero grammatical errors can sometimes be flagged because AI-generated content is often flawless in its construction. |

It's important to remember that these are just potential triggers. The presence of one or even all of these factors doesn't mean a paper was written by AI, but it helps explain why a false positive might occur.

Conflicting Data on Accuracy

The debate over the accuracy of these tools is fueled by wildly different claims. Turnitin states its false positive rate is less than 1% for papers with over 20% AI writing. However, independent research tells a very different story.

A widely cited Washington Post test, for example, found much higher error rates. In some of its smaller tests, AI detectors showed false positive rates hovering around 50%. This massive gap shows how unsettled the accuracy question still is, and it raises serious doubts about trusting these tools without scrutiny. You can read more about the challenges of AI detection accuracy to see the full scope of the issue.

An AI detection score should never be treated as irrefutable proof of academic misconduct. It is an indicator, a single data point that requires human judgment, context, and a conversation with the student before any conclusions are drawn.

At the end of the day, this technology is still in its infancy. An AI score can be a useful signal for an educator, perhaps prompting them to take a closer look at a student's submission. But it can never, ever replace the critical role of human oversight. The final call must always come down to a holistic review that considers the student’s past work, their writing process, and direct communication.

How to Use AI Ethically in Your Academic Work

Let's be realistic: AI tools aren't going anywhere. Student adoption has exploded, with recent studies showing that nearly 50% of college students now use AI tools on a monthly, weekly, or even daily basis. What’s even more telling is that a staggering 75% say they’ll keep using them even if their school bans them.

This isn't just a fleeting trend. The conversation has to shift from "if" students will use AI to "how" they can use it responsibly. The secret is to think of these tools as a learning assistant, not a ghostwriter. Instead of asking AI to write your entire essay, treat it like a brainstorming partner to kick things off.

You can use it to spitball initial ideas, sketch out a basic outline, or even simplify a dense topic that’s making your head spin. This way, you stay in control, and the final paper reflects your own critical thinking and unique voice.

Frame AI as a Support Tool

The most ethical way to approach AI is for support, not substitution. The goal is to boost your understanding and make you more productive, not to outsource the hard work of thinking and writing. When your final paper is truly your own, the whole "does Turnitin check for AI" question becomes a lot less scary.

Here are a few practical ways to use AI as a responsible study partner:

- Idea Generation: Try prompts like, “Give me five potential arguments about…” to get the ball rolling.

- Outline Creation: Ask for a simple essay structure on your topic to help organize your thoughts.

- Concept Clarification: Use a prompt like, “Explain [complex theory] to me like I'm a beginner.”

It's also smart to understand how different tools work. For example, it helps to know how AI note takers summarize content, since they also produce AI-generated text based on source material.

Develop Your Authentic Voice

At the end of the day, your best defense against a false positive is simply developing your own distinct writing style. AI-generated text often feels a bit flat and generic because it’s based on statistical patterns. Human writing, on the other hand, is full of personality—it has its own unique quirks, rhythms, and word choices.

By focusing on developing your own authentic voice, you not only improve your academic skills but also naturally create work that is difficult for any algorithm to misidentify. Your personal style is a powerful buffer.

This means that even if you use an AI tool for some initial brainstorming, the final product should always sound like you. When you take the time to rewrite, analyze, and combine ideas in your own words, you’re doing more than just avoiding detection. More importantly, you’re building the critical thinking skills that are the whole reason you're in school in the first place.

If you need help refining an AI-generated draft, our guide on how to rewrite AI-generated text can show you how to inject your own voice back into the work.

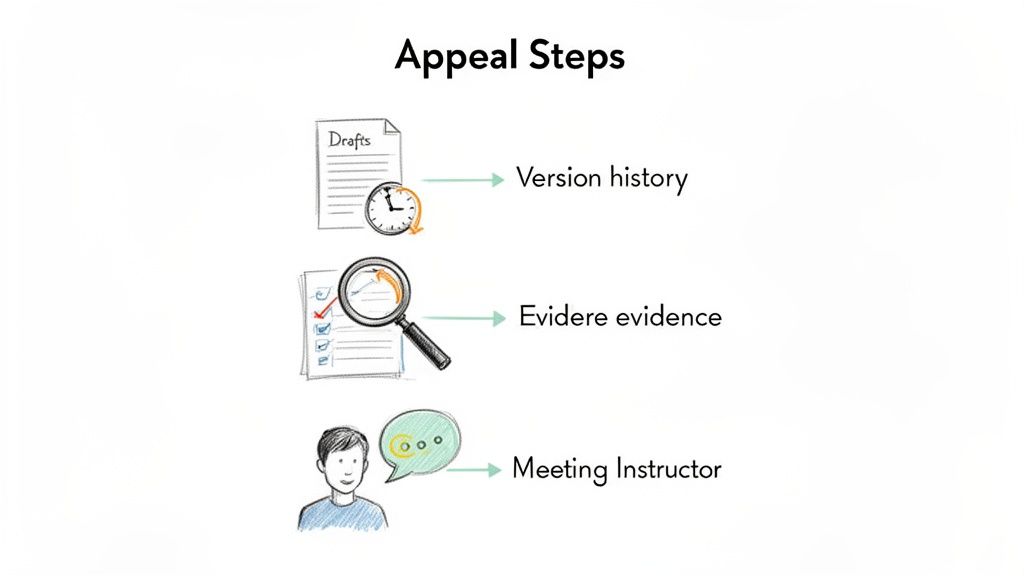

What to Do If You're Falsely Accused

It’s an incredibly stressful situation: being accused of academic misconduct when you know you did the work yourself. If a Turnitin AI flag puts you in this spot, the first thing to do is take a breath. Stay calm and approach the problem methodically. Remember, an accusation is not a conviction.

Before you do anything else, pull up your institution's academic integrity policy and look specifically for its rules on AI use. These policies can differ wildly from one school to another, so knowing the exact rules is a crucial first step. This isn't just about being right; it's about being prepared for a productive, informed conversation.

Gather Your Evidence

Your next move is to build a case that proves your writing process was authentic. The good news is that the tools we use for writing often leave a detailed digital trail of our work. This trail is your best defense.

Start pulling together every piece of proof you can find. Think about things like:

- Version History: If you use Google Docs or Microsoft 365, you have a goldmine. Their version history feature shows every single edit, from the first sentence on a blank page to the final period.

- Outlines and Drafts: Did you save any early outlines, messy first drafts, or brainstorming notes? These are perfect for showing how your ideas took shape.

- Research Notes: Your browser history, saved articles, highlighted PDFs, or even handwritten notes can demonstrate the research that fueled your writing.

The goal here is to prove ownership of your intellectual labor. When you present a clear timeline of how your paper came to be, you change the conversation from being about a single AI score to being about your genuine writing journey.

Prepare for a Constructive Conversation

Once you have your evidence organized, it's time to request a meeting with your instructor. Try to frame this as a chance to clarify your process, not a confrontation. Your goal is to walk them through your work.

Show them your version history. Explain your workflow. Point to your outlines and talk about how your ideas evolved from one draft to the next. A transparent, cooperative approach shows your commitment to academic honesty. It’s important to remember that AI detection is still new territory, and many educators are still working out how to interpret these tools. A calm, evidence-based discussion is almost always the best path to resolving a false positive.

For more strategies on upholding academic integrity in your work, check out our guide on how to avoid plagiarism.

Got Questions About Turnitin and AI? We've Got Answers.

Even after getting the big picture, a lot of specific questions pop up. Let's tackle some of the most common ones that students and educators are asking about Turnitin's AI detection.

Can Turnitin Actually Catch GPT-4 and Other Top Models?

Yes, it can. Turnitin's AI detector isn't built to hunt for one specific AI model. Instead, it’s trained to spot the statistical fingerprints that nearly all large language models (LLMs)—including heavy hitters like GPT-4, Claude, and Gemini—leave behind.

Think of it this way: The system isn't looking for a particular author's name; it's looking for a specific style of writing that's common to machines. Because it focuses on how the text is constructed, not just what it says, it stays relevant even as AI models get smarter.

Will Using Grammarly Get Me Flagged for AI?

For the most part, no. Using a tool like Grammarly for its core purpose—fixing typos, catching grammar mistakes, and correcting punctuation—is just fine. That’s editing, not AI generation.

Where you might run into trouble is with advanced "rewrite" or "rephrase" features that change entire sentences for you. If the tool is doing the heavy lifting and restructuring your work, it could start to look more like machine-generated text. The line is really between getting a little help and having a machine do the writing.

What if I Rewrite AI Content Myself? Can Turnitin Still Detect It?

This really comes down to how much you rewrite it. If you're just swapping out a few words here and there (the ol' thesaurus trick), the underlying AI sentence structure and patterns will likely still be there for the detector to find.

But if you use the AI-generated text only as a starting point for ideas and then write the entire thing from the ground up, using your own voice, your own sentence structures, and your own way of explaining things, you're far less likely to be flagged. The detector is sniffing out AI writing patterns, not AI-inspired thoughts.

The best way to avoid a flag is simple: be the author. When you truly process the information and put it into your own unique words, your writing won't have the mathematical predictability that AI detectors are built to catch.

Do Students Get to See Their AI Detection Score?

That's entirely up to your instructor or school. Some teachers make the AI score visible right in the Similarity Report because they believe in transparency. Others prefer to keep it private, using it as an internal signal to take a closer look at a submission.

There's no one-size-fits-all rule here, so your best bet is to check your course syllabus or your university's academic integrity policy to see how they handle it.

Ready to make sure your writing is polished and genuinely yours? Rewritify can help you refine your drafts and any AI-generated text into clear, original content that reflects your own style. Our Undetectable mode is designed to enhance your work while keeping your unique voice front and center. Get started for free at Rewritify.com.
