Are AI Detectors Accurate? Uncovering the Real Truth

Are AI detectors accurate? This guide unpacks the data, revealing why 99% accuracy claims are misleading and how to navigate the risks of false positives in your work.

So, you're wondering: Are AI detectors accurate?

Let’s get right to it. The short answer is no, at least not in the way their marketing would have you believe. They can sometimes offer a helpful hint, but their reliability is all over the map. Accuracy can swing wildly based on which AI model wrote the text, how complex the subject is, and whether a human has edited the content at all.

The High Stakes of AI Detection

This isn’t just a technical debate. The verdict from an AI detector can have serious, real-world consequences for students, writers, and businesses. A wrong call isn't a simple mistake; it can lead to false accusations, a trashed reputation, and a lot of wasted time and energy.

Think about a student whose original, thoughtfully written essay gets flagged as AI-generated, suddenly putting their academic future on the line. Or imagine a freelance writer losing a client because their genuine work was incorrectly flagged by a faulty tool. The stakes are incredibly high, which is why we have to get real about what these tools can and can't do.

The Two Sides of Inaccuracy

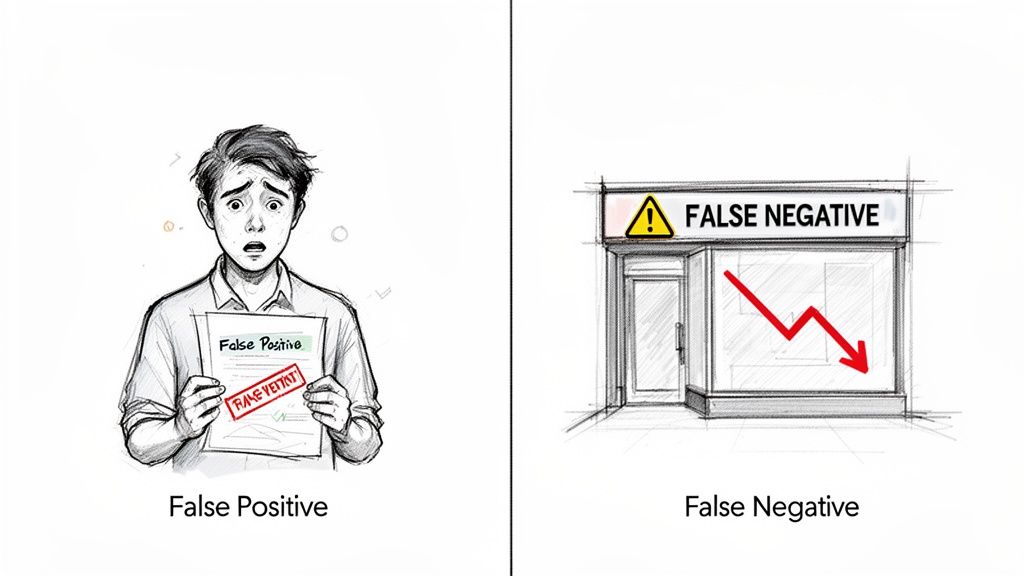

When these detectors fail, they fail in two critical ways, each with its own nasty fallout.

- False Positives: This is the big one for individuals. It’s when a detector wrongly flags human-written text as AI-generated. This is what leads to those unfounded accusations of cheating or plagiarism in both school and the workplace.

- False Negatives: This happens when AI-generated text slips past the detector, masquerading as human work. For publishers and businesses, this means low-quality, generic content might get published, which can hurt search engine rankings and damage a brand's credibility.

At their core, these tools aren't giving a definitive "yes" or "no." They're making a statistical guess based on patterns. As AI models get scarily good at mimicking human writing, those guesses get a whole lot less reliable.

This guide will give you a deep, evidence-based look at how these detectors work, why their bold accuracy claims often crumble under pressure, and what you can do to navigate this messy new reality.

First, let's compare what the marketing materials promise versus what independent research has found.

AI Detector Accuracy Claims Versus Reality

The gap between advertised accuracy and real-world performance is often massive. The table below breaks down what vendors claim compared to what independent studies have actually observed.

| Content Type | Advertised Accuracy | Observed Accuracy | Primary Risk |
|---|---|---|---|
| General Content | Often 98-99% | As low as 53% in some tests | High rate of false positives/negatives, making it unreliable for any critical decision. |
| Pure AI-Generated Text | Up to 99% | Generally high (90%+), but drops with paraphrasing or new models | A false sense of security; these tools are easily bypassed with humanization techniques. |
| Human-Written Text | Claims <1% false positives | Can reach 7-10% false positives, especially in technical or academic writing | Innocent writers being wrongly accused of academic or professional misconduct. |
| Mixed or Paraphrased Text | Often high (e.g., 96%) | Extremely low; detection can fall to near zero with effective paraphrasing | Undetected AI content impacting SEO, academic standards, or a company's brand voice. |

As you can see, once human editing or paraphrasing enters the picture, the reliability of these detectors falls off a cliff. This is a critical point to remember, as almost all professionally used AI content has some level of human involvement.

How AI Detectors Work and Why They Often Fail

To really get why the answer to "are AI detectors accurate?" is so often a firm "no," you have to pop the hood and see how they think. Think of an AI detector not as a plagiarism checker comparing text to a database, but more like a linguistic detective. It’s trained to spot the subtle, almost subconscious giveaways of machine-generated writing.

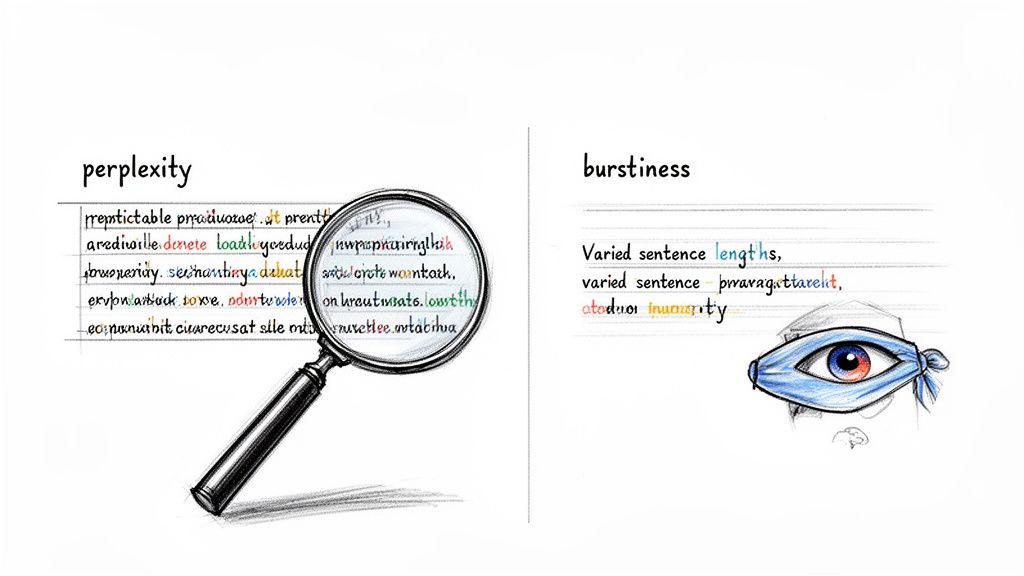

This detective work boils down to looking for two main clues: perplexity and burstiness. Once you understand these two concepts, you'll see exactly why these tools can be so easy to fool.

The Predictability Problem: Perplexity

Perplexity is really just a fancy way of measuring how predictable a piece of writing is. Human writing is wonderfully messy and unpredictable. We use weird idioms, unexpected word choices, and sentences that meander. AI models, by their very nature, are trained to predict which words are statistically likely to come next, and they tend to favor those likely choices. The result is text that's smooth and logical but often lacks any real spark of surprise.

For example, a sentence like, “The morning air was crisp as I sipped my coffee,” has low perplexity. It’s a common, expected phrase. A human, however, might write something like, “The morning air bit at my cheeks, a stark counterpoint to the steamy comfort of my coffee.” That second sentence has much higher perplexity because the phrasing and word choices are less common.

Key Takeaway: AI detectors are designed to flag text with consistently low perplexity, assuming that its high predictability is the signature of a machine. The problem is, this often penalizes clear, straightforward human writing—especially in technical or academic fields where precise, standard language is not just common, but required.

This means that while the detector is on the hunt for robotic text, it can easily mistake a human expert for a machine. This is a fundamental vulnerability and a huge reason why their accuracy is so questionable.
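To make perplexity concrete, here is a minimal Python sketch. It assumes you already have the probability a language model assigned to each token of a text; in a perplexity-based detector these would come from a scoring model, which is not shown here. Perplexity is the exponential of the average negative log-probability per token, so text made of highly expected words scores low. The token probabilities and the `THRESHOLD` cutoff are invented for illustration and do not come from any real detector.

```python
import math

def perplexity(token_probs):
    """Perplexity = exp of the mean negative log-probability per token."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)

# Hypothetical per-token probabilities from some scoring model (made up).
predictable = [0.6, 0.5, 0.7, 0.55, 0.65, 0.6]   # common phrasing: each word was expected
surprising = [0.6, 0.05, 0.2, 0.02, 0.3, 0.08]   # unusual word choices: lower probabilities

# Hypothetical cutoff: a naive detector might flag anything below it as "AI-like".
THRESHOLD = 5.0

for label, probs in [("predictable", predictable), ("surprising", surprising)]:
    ppl = perplexity(probs)
    verdict = "flag as AI-like" if ppl < THRESHOLD else "treat as human-like"
    print(f"{label}: perplexity={ppl:.2f} -> {verdict}")
```

The weakness described above shows up directly in this sketch: a clear, conventional sentence written by a human expert also earns high token probabilities, so it falls below the same threshold as machine text.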

The Rhythm Problem: Burstiness

Right after perplexity, the next thing our detective looks for is burstiness. This is all about the rhythm and variation in sentence length and structure. Think about how you naturally write—you probably mix short, punchy sentences with longer, more descriptive ones. This creates a varied, engaging flow.

AI models, particularly older ones, often fall into a trap of creating text with an unnervingly consistent rhythm. The sentence lengths are all too similar, the structures too repetitive. This lack of variation, or low burstiness, is another dead giveaway for a detector.

- High Burstiness (Human): Short sentence. Then a much longer, more descriptive one that elaborates on the previous point, offering new details. Another short one for impact.

- Low Burstiness (AI): A sentence of medium length is here. Another sentence of similar length follows. A third sentence of roughly the same structure appears.
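Detectors don't publish one standard burstiness formula, so the Python sketch below uses a simple proxy as an assumption: the coefficient of variation of sentence length (standard deviation divided by mean word count). It splits each of the two example passages above into sentences and scores them.

```python
import re
import statistics

def sentence_lengths(text):
    """Split on sentence-ending punctuation and count the words in each sentence."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]

def burstiness(text):
    """Coefficient of variation of sentence length (std dev / mean).

    Higher values mean more variation between short and long sentences.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # a single sentence has no variation to measure
    return statistics.pstdev(lengths) / statistics.mean(lengths)

high = ("Short sentence. Then a much longer, more descriptive one that elaborates "
        "on the previous point, offering new details. Another short one for impact.")
low = ("A sentence of medium length is here. Another sentence of similar length follows. "
       "A third sentence of roughly the same structure appears.")

print(sentence_lengths(high), round(burstiness(high), 2))
print(sentence_lengths(low), round(burstiness(low), 2))
```

The varied passage has sentences of 2, 16, and 5 words, while the uniform one has 7, 6, and 9, so the first scores several times higher on this proxy.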

This uniformity practically screams AI. But the game is always changing. Newer AI models are getting much better at faking human-like burstiness, and even a light edit by a human can completely erase this AI fingerprint. To get a better sense of how AI models function and why they produce flawed or predictable text, it's worth understanding how brands are dealing with imperfect AI and hallucination rates.

The Cat-and-Mouse Game of Detection

Here’s the real kicker: AI detection is an inherently reactive technology. The detectors are always trained on the output of existing AI models. So, the moment a new, more advanced language model hits the market, every detector out there is instantly playing catch-up.

They're stuck in a relentless cat-and-mouse game they can't win. An AI detector fine-tuned to spot the patterns of GPT-3.5 will almost certainly struggle to identify the far more nuanced text generated by GPT-4 or Claude 3.

This constant evolution means any tool's claimed accuracy is just a snapshot in time, relevant only for the specific AI models it was trained on. For a closer look at the methods involved, you might find our guide on [how to detect AI-generated content](https://www.rewritify.com/blog/how-to-detect-ai-generated-content) insightful. Ultimately, the blistering pace of generative AI development all but guarantees that any detection method based on yesterday's technology is destined to become obsolete.

What Independent Studies Say About AI Detector Accuracy

You've probably seen the bold claims plastered across AI detector websites: 98% or even 99% accuracy. Those numbers sound impressive, but they tell a very specific, and often misleading, story. They typically come from tests run in a perfect lab environment, scanning pure, unedited text straight from an AI.

The moment you bring these tools into the real world, that shiny accuracy starts to tarnish.

The gap between marketing hype and reality is so wide that it has drawn the attention of regulators. The FTC, for instance, filed a complaint against one company, which agreed to settle allegations that it had falsely marketed its tool as '98 percent' accurate. When tested independently on general-purpose content, the tool's actual accuracy was a dismal 53%, barely better than a coin flip. If you want to dig into the details, you can explore the specifics of AI detection accuracy here.

This really drives home a critical point: an accuracy claim without context is just a number, and a pretty meaningless one at that.
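One piece of missing context is how much of the text being checked is actually AI-generated. The Python sketch below uses hypothetical numbers roughly in the range of the table earlier (a detector that catches 90% of AI text and wrongly flags 7% of human text) to show how one detector can report high overall accuracy while many of its flags land on human writers. The prevalence values are assumptions chosen for illustration, not measurements.

```python
def detector_outcomes(prevalence, true_positive_rate, false_positive_rate):
    """Return (overall accuracy, precision) for a detector on a given mix of texts.

    prevalence: fraction of checked texts that really are AI-generated.
    true_positive_rate: fraction of AI texts the detector flags.
    false_positive_rate: fraction of human texts the detector wrongly flags.
    """
    ai_flagged = prevalence * true_positive_rate                  # correct flags
    human_flagged = (1 - prevalence) * false_positive_rate        # false accusations
    human_cleared = (1 - prevalence) * (1 - false_positive_rate)  # correct passes
    accuracy = ai_flagged + human_cleared
    flagged = ai_flagged + human_flagged
    # Precision: share of all flags that point at genuinely AI-written text.
    precision = ai_flagged / flagged if flagged else float("nan")
    return accuracy, precision

# Hypothetical detector: catches 90% of AI text, wrongly flags 7% of human text.
for prevalence in (0.5, 0.1):
    acc, prec = detector_outcomes(prevalence, 0.90, 0.07)
    print(f"AI share {prevalence:.0%}: accuracy {acc:.1%}, correct flags {prec:.1%}")
```

With these made-up inputs, overall accuracy stays above 90% in both cases, yet when only one text in ten is AI-written, roughly four in ten flags point at a human.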

Decoding the Data: What the Research Shows

When you look at academic studies and third-party tests, a much more realistic picture emerges. Researchers consistently find that when these tools face a mix of content—like human writing, paraphrased AI text, or content from newer AI models—those high accuracy scores plummet.

A huge red flag is the rate of false positives, which is when the tool wrongly accuses a human of using AI. Even premium tools that aim for a low false positive rate of 1-2% can cause massive problems at scale. Think about it: a large university can process hundreds of thousands of essays a year, so even a 1% error rate could mean thousands of human-written submissions incorrectly flagged for academic misconduct.

And that's for the good tools. Many free or lower-quality detectors have alarmingly high false positive rates, sometimes flagging over 10% of human-written work as AI-generated.
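The scale argument is simple arithmetic. The Python sketch below assumes a hypothetical university with 40,000 students who each submit 10 human-written essays a year, all checked by a detector with a 1% false positive rate. It also treats each scan as an independent trial, which is a simplification; in practice, one student's writing style may trip a detector again and again.

```python
def expected_false_flags(human_submissions, false_positive_rate):
    """Expected number of human-written submissions wrongly flagged as AI."""
    return human_submissions * false_positive_rate

def chance_flagged_at_least_once(submissions_per_student, false_positive_rate):
    """Probability an honest student is flagged on at least one submission,
    assuming each scan is an independent trial (a simplifying assumption)."""
    return 1 - (1 - false_positive_rate) ** submissions_per_student

students = 40_000          # hypothetical enrollment
essays_per_student = 10    # hypothetical yearly submissions per student
fpr = 0.01                 # the "low" 1% false positive rate from the text

print(expected_false_flags(students * essays_per_student, fpr))
print(f"{chance_flagged_at_least_once(essays_per_student, fpr):.1%}")
```

Under these assumptions the detector produces about 4,000 false flags a year, and any given honest student has close to a 1 in 10 chance of being flagged at least once.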

One of the most consistent findings across multiple studies is this: while many detectors are pretty good at catching untouched AI text, their performance crumbles the second a human gets involved. A little paraphrasing or a few simple edits can be enough to completely bypass the system.

This is a massive weakness, especially since most people who use AI responsibly are doing exactly that—using AI as a starting point and then editing, refining, and adding their own expertise.

The Problem with Scores and Benchmarks

Another tricky part of this is how accuracy is even measured. A tool's documentation might mention an "AUC score," but that's just jargon to most people. AUC stands for Area Under the (ROC) Curve, and it measures how well a model can tell two groups apart (in this case, human vs. AI writing) across every possible flagging threshold, rather than at a single cutoff.

A perfect score is 1.0, while 0.5 means the tool is just guessing. Many tools that boast high overall accuracy turn out to have much lower AUC scores in independent evaluations, which suggests they struggle to separate human and AI writing once the cases stop being clear-cut.
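AUC has a handy interpretation: it equals the probability that a randomly chosen AI-written text gets a higher "AI score" from the detector than a randomly chosen human-written one, with ties counting as half. The Python sketch below computes it exactly that way from two lists of scores. The scores are invented for illustration.

```python
def auc(human_scores, ai_scores):
    """AUC via pairwise comparison (equivalent to the Mann-Whitney U statistic).

    Counts how often an AI text outscores a human text; ties count as 0.5.
    """
    if not human_scores or not ai_scores:
        raise ValueError("need at least one score in each group")
    wins = 0.0
    for a in ai_scores:
        for h in human_scores:
            if a > h:
                wins += 1.0
            elif a == h:
                wins += 0.5
    return wins / (len(ai_scores) * len(human_scores))

# Hypothetical detector scores (0 = surely human, 1 = surely AI).
human = [0.10, 0.35, 0.40, 0.80]
ai = [0.30, 0.60, 0.70, 0.90]

print(auc(human, ai))               # 0.6875: the groups partly overlap
print(auc([0.1, 0.2], [0.8, 0.9]))  # 1.0: perfect separation
print(auc([0.5, 0.5], [0.5, 0.5]))  # 0.5: indistinguishable, pure guessing
```

Notice that a single human essay scored at 0.80 and a single AI text scored at 0.30 are enough to pull this toy detector well below a perfect 1.0.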

On top of that, AI models are evolving so quickly that any accuracy benchmark is outdated almost as soon as it's published. A detector trained to recognize patterns from GPT-3.5 might be completely clueless when it sees text from a newer model like GPT-4 or Claude 3. This creates a constant cat-and-mouse game where the detectors are always a step behind.

Even established academic integrity tools like Turnitin aren't immune to these challenges, though they are often carefully tuned to minimize false accusations. To learn more, you can check out our guide on how Turnitin handles AI detection.

Ultimately, the message from independent research is loud and clear: take the results from any AI detector with a huge grain of salt. They aren't a definitive verdict. At best, they're a weak signal that should never, ever be the only reason for making a serious decision.

The Key Factors That Influence AI Detector Accuracy

An AI detector’s accuracy score isn't a fixed, reliable number. It’s more like a moving target, with its performance shifting dramatically based on a handful of critical factors. Understanding these is the key to knowing when a detector is most likely to fail—and why you should always take their results with a healthy dose of skepticism.

Honestly, the question "are AI detectors accurate?" can only be answered by looking at the context. Without that, any accuracy claim you see is practically meaningless.

The Impact of the AI Model

The specific Large Language Model (LLM) that created the text is the first huge variable. Detectors are trained to spot the unique patterns and statistical fingerprints of existing AI models. But when a new, more advanced model like GPT-4 or Claude 3 hits the scene, it writes in a much more sophisticated, human-like way.

This means detectors trained on older models like GPT-3.5 are instantly playing catch-up. They're looking for outdated tells and often completely miss the more polished prose of a newer generator. It's like a detective who only knows how to look for old, muddy boot prints when the new suspect is wearing clean sneakers.

The Game-Changing Effect of Paraphrasing

Perhaps the single biggest thing that demolishes detector accuracy is human editing or the use of paraphrasing tools. Even simple changes—rewording sentences, swapping out a few words, or adjusting the flow—can completely erase the statistical patterns that these detectors are built to find.

This is where those bold 99% accuracy claims completely fall apart. A tool might be pretty good at spotting raw, unedited AI output, but its effectiveness plummets the moment a human touches it.

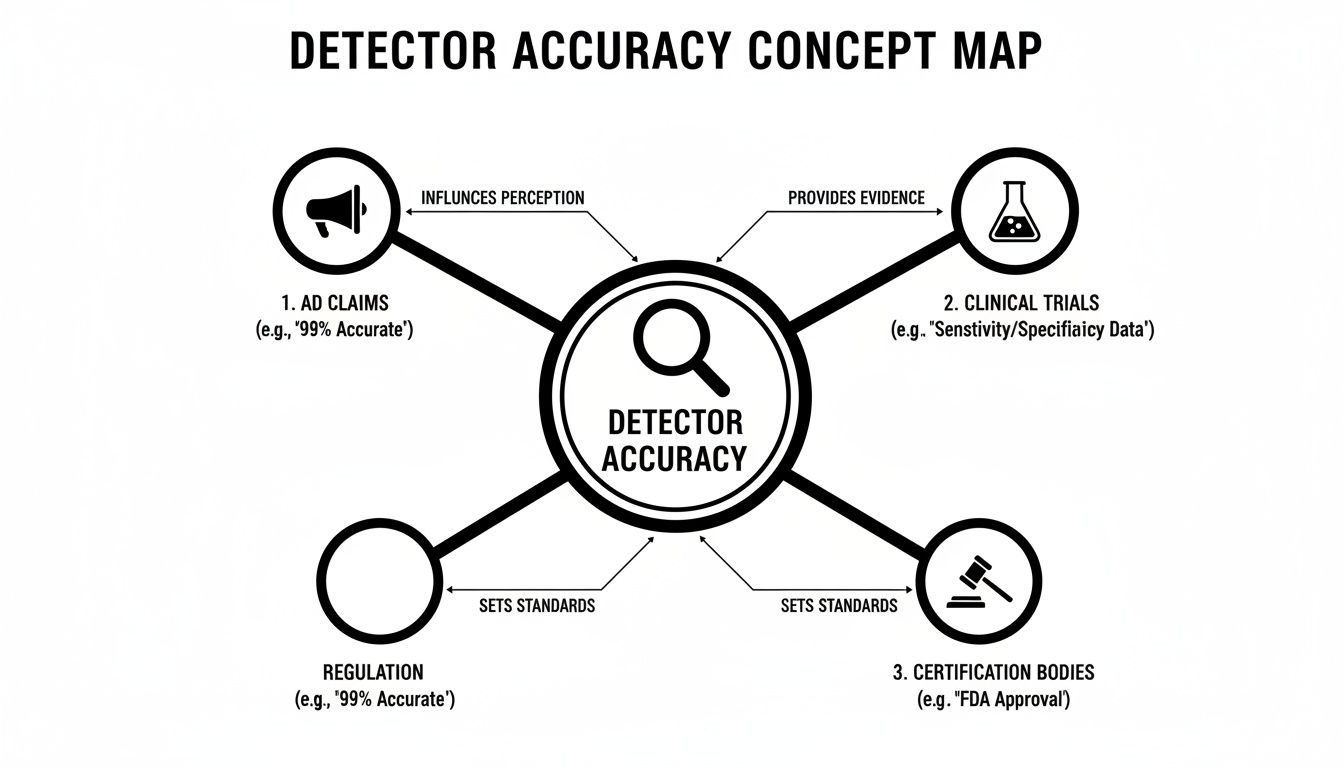

This concept map shows how detector accuracy is tangled up with external factors like marketing claims, academic studies, and emerging regulations.

The map drives home the point that accuracy isn't just a simple technical metric. It's a complex issue sitting at the intersection of technology, business, and policy.

And the effect isn't trivial. While companies like GPTZero claim high accuracy on pure AI text, research shows that paraphrasing can slash detection rates from a near-perfect 99.52% down to an abysmal 0.02%. This vulnerability is a crucial reason why third-party studies often expose just how fragile these systems are, especially against modern "humanizer" tools. You can read more about these AI accuracy benchmarks to see the data for yourself.

The Role of Text Length and Complexity

The nature of the text itself plays a huge role. Generally, AI detectors perform much better on longer pieces of content, like full articles or essays. This gives them a bigger sample size to work with, making it easier to spot those recurring, non-human patterns in word choice and sentence structure.

But they often struggle mightily with shorter texts. For things like product descriptions, email subject lines, or social media posts, there just isn't enough text for the detector to make a confident call. This leads to wildly inaccurate results, including a much higher chance of flagging short, human-written content as AI.
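A rough statistical intuition for why short texts are harder: many detector signals, perplexity included, are averages over tokens, and an average over only a few tokens is noisy. Under the simplifying assumption that per-token scores are independent with a fixed spread (here a hypothetical standard deviation of 2.0), the standard error of that average shrinks with the square root of the token count. Real text is not independent token by token, so treat this Python sketch as an illustration, not a model of any particular detector.

```python
import math

def standard_error(per_token_std, num_tokens):
    """Standard error of a mean over num_tokens independent per-token scores."""
    return per_token_std / math.sqrt(num_tokens)

PER_TOKEN_STD = 2.0  # hypothetical spread of a per-token score

# Illustrative lengths: a social post, a product description, an essay.
for tokens in (25, 150, 1500):
    print(f"{tokens:>5} tokens -> standard error {standard_error(PER_TOKEN_STD, tokens):.3f}")
```

Going from 25 tokens to 1,500 shrinks the noise in the average by a factor of about 7.7 (the square root of 60), which is one reason a verdict on a one-line post is so much shakier than one on a full essay.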

Likewise, highly technical or academic writing can trip up detectors. This kind of content often uses precise, standardized language that can accidentally mimic the statistical patterns of AI-generated text, leading to the incorrect flagging of an expert human’s work.

Why the Content's Domain Matters

Finally, a detector's accuracy is heavily dependent on the domain of the content it was trained on. A tool trained mostly on a massive dataset of news articles and general web content might be reasonably good at spotting AI-generated text in those same areas.

But what happens when you ask it to analyze something completely different?

- Academic Papers: The structured, formal language can look very "AI" to a detector that hasn't seen much academic writing.

- Marketing Copy: Punchy, persuasive language follows different conventions, so AI-written copy can easily fly under the radar.

- Creative Writing: Poetry or fiction uses unpredictable, figurative language that can confuse a detector entirely.

If the tool hasn't been trained on a diverse enough range of writing styles, its accuracy will be all over the place. This training bias is a hidden weakness that makes a universal, one-size-fits-all AI detector a very unreliable proposition.

The High Stakes of Inaccuracy: Real-World Consequences

The question "are AI detectors accurate?" isn't just a technical debate—it's about real people and real livelihoods. The flaws in these tools have profound, often damaging, consequences. An inaccurate score isn't a simple data point; it's a verdict that can derail a career, jeopardize a student's future, or silently chip away at a brand's hard-won reputation.

When an AI detector gets it wrong, the fallout is anything but abstract. It's a student getting an email accusing them of cheating on an essay they spent weeks writing. It's a freelance writer losing a contract because their original work was incorrectly flagged. These are the human stories behind a technology that is still fundamentally unreliable.

This impact really boils down to two types of failure, and each brings its own flavor of chaos.

The False Positive Nightmare for Individuals

A false positive is when a detector mistakenly labels human-written text as AI-generated. This is the scenario that ignites personal and professional crises, forcing innocent people to prove their own authenticity against a machine's judgment.

Picture a university student who has poured their soul into a thesis, only to have a tool like Turnitin flag it. All of a sudden, they're presumed guilty and have to defend themselves against an algorithm. The emotional stress and potential academic penalties are just massive.

- Academic Jeopardy: Students can face suspension, expulsion, or a permanent black mark on their record, all from one faulty scan.

- Professional Damage: Writers, journalists, and other professionals can find their credibility shattered overnight, leading to lost clients and tarnished careers.

- Creative Suppression: The fear of being falsely accused can actually stifle creativity. It pushes writers toward simpler, blander language that’s less likely to trigger a detector.

And this isn't some rare fluke. One comprehensive study of over 14,400 abstracts found that AI detectors can produce up to 8% false positives on writing confirmed to be human. While no tool is perfect, that error rate is far too high for making high-stakes decisions. You can read the full research about these detection challenges to see for yourself.

The False Negative Risk for Businesses

On the other side of the coin, a false negative is when AI-generated text slips right past a detector, getting the all-clear as human work. For businesses and publishers, this is a more subtle threat, one that causes slow but significant damage over time.

When a company unknowingly publishes low-quality, generic AI content, it risks undermining its entire marketing strategy. Search engines like Google are all about rewarding content that shows experience, expertise, authoritativeness, and trustworthiness (E-E-A-T). Undetected AI content often falls completely flat on these measures.

The real danger of false negatives isn't a single bad article. It's the slow, cumulative erosion of a brand's voice, authority, and connection with its audience, leading to lost trust and declining organic traffic.

This risk shows up in a few key areas for a business:

- SEO Penalties: Content that lacks originality and depth gets down-ranked by search algorithms, torpedoing visibility and traffic.

- Brand Dilution: Relying too much on generic AI text makes a brand's voice sound robotic and impersonal, which pushes customers away.

- Eroding Trust: If your audience starts to feel your content is inauthentic or unhelpful, they lose faith in the brand itself, hitting loyalty and sales hard.

Whether it’s the immediate crisis of a false positive or the slow burn of a false negative, the consequences of inaccurate AI detection are severe. Getting through this requires a smart, informed approach that always puts human oversight and genuine quality first.

How to Navigate the AI Detection Maze

So, with AI detectors being so wildly unreliable, how do you actually use AI without constantly looking over your shoulder? The secret isn’t finding some magic tool to trick the system. It's about a fundamental shift in mindset—away from fearing detection and toward a process that guarantees your content is original, valuable, and genuinely high-quality.

It's time we all stopped treating detector scores as the gospel truth. Think of them as a minor, often faulty, signal. A high "AI score" isn't a guilty verdict; it's just a nudge for a human to take a closer look. Nothing more.

Adopt a Proactive Workflow

The best strategy is to get ahead of the problem instead of just reacting to it. A modern, responsible content workflow doesn't shun AI; it embraces it as a powerful assistant at the right stages. This proactive approach gives you a repeatable process for creating excellent work, every single time.

Here’s what that looks like in practice:

Step 1: Ideate and Draft with AI: Use generative AI as your creative springboard. It’s brilliant for brainstorming outlines, kicking around ideas, and spitting out a rough first draft. This is a massive time-saver and a fantastic cure for writer's block.

Step 2: Refine and Rewrite with Tools: With that raw material in hand, it's time to polish. Advanced rewriting and paraphrasing tools are built to help you clean up clunky sentences, improve clarity, and make the writing sound more natural. This is how you bridge the gap between a robotic draft and a fluid, human-sounding final piece. If you're curious, you can learn more about how undetectable AI tools operate.

Step 3: Inject Your Unique Expertise: This is the non-negotiable part. A real person needs to go through the text with a fine-tooth comb. This is where you add your unique voice, your hard-won insights, and your personal stories. You fact-check every claim, weave in specific examples, and ensure the content delivers real value that no AI could ever dream of creating on its own.

When you focus on creating verifiably great work, the whole question of AI detection becomes moot. The goal isn't just to pass a detector; it's to produce something so good, so insightful, and so clearly yours that no one would ever second-guess its origin.

This simple three-step process—Draft, Refine, and Finalize—does more than just sidestep the pitfalls of AI detection. It helps you create objectively better content. You're combining the raw speed of AI with the irreplaceable depth and authenticity that only a human can provide. By making this your go-to method, you can scale up your content creation confidently, without ever compromising on quality.

A Few Lingering Questions About AI Detector Accuracy

Even after digging into the nuts and bolts of how AI detectors work, a few common questions always seem to pop up. Let's tackle them head-on so you have the full picture of what these tools can—and can't—do.

Can Any AI Detector Be 100% Accurate?

In a word: no. Getting to 100% accuracy is pretty much impossible, both technically and in practice. These tools don't give you a definitive "yes" or "no" answer. They work on probabilities, calculating the likelihood that a piece of text was written by AI based on its linguistic fingerprint.

Think of it this way: as AI models get better at sounding human, complete with creative quirks and even mistakes, the line between our writing and theirs gets fuzzier. It's a constant game of cat and mouse, and the detectors are always playing catch-up with the latest AI generators.

This is why you should always see a detector's score as a starting point for a closer look, not the final word. It's a single piece of evidence, not the whole truth.

Ultimately, perfect accuracy will always be a moving target that we'll likely never hit.

Does Paraphrasing Guarantee a Pass?

Not quite, but it gets you close. Those advanced paraphrasing tools, sometimes called "humanizers," are built specifically to scramble the very patterns that AI detectors are trained to find. They mix up sentence structures and word choices, which can often drop the detection probability way down, sometimes near zero.

But here’s the catch: no tool can promise a 100% guarantee that it will fool every detector out there, especially not the ones that will be developed tomorrow. The smartest approach is to use a paraphraser to clean up a first draft, then roll up your sleeves and do a proper human review. That's where you add your unique voice, your own insights, and your own facts to make the content genuinely yours.

Are Paid Detectors More Reliable Than Free Ones?

Generally speaking, you get what you pay for. Paid detectors are usually a bit more sophisticated, relying on more complex models and getting more frequent updates to keep pace with new AI writing tools. They also tend to offer more detailed reports.

But don't assume a high price tag automatically means high accuracy.

- Real Limitations: Independent studies have shown time and again that even the top-shelf, paid tools have some serious blind spots.

- The False Positive Problem: Many still have a worryingly high rate of false positives, incorrectly flagging human-written text as AI-generated.

- Easy to Bypass: Most can still be tricked with some clever paraphrasing or a bit of human editing.

The bottom line? Don't let the price guide you. The only way to know if a tool works for you is to test it against your own content and see how it performs based on real, independent data.
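One way to run that test is to gather a small set of documents whose origin you already know (for example, pieces you wrote yourself and drafts you generated with AI), run them through the detector, and tally the two error types described at the start of this guide. The Python sketch below does the tally; the `Result` records are invented sample data that you would replace with the detector's actual verdicts on your own files.

```python
from dataclasses import dataclass

@dataclass
class Result:
    truly_ai: bool   # what you know about the document's origin
    flagged: bool    # what the detector reported

def error_rates(results):
    """Return (false positive rate, false negative rate) from labeled detector results."""
    human = [r for r in results if not r.truly_ai]
    ai = [r for r in results if r.truly_ai]
    if not human or not ai:
        raise ValueError("include both human-written and AI-generated samples")
    false_positive_rate = sum(r.flagged for r in human) / len(human)
    false_negative_rate = sum(not r.flagged for r in ai) / len(ai)
    return false_positive_rate, false_negative_rate

# Invented example: 8 of your own human-written pieces and 4 AI drafts.
sample = (
    [Result(truly_ai=False, flagged=False)] * 7
    + [Result(truly_ai=False, flagged=True)]       # one human piece wrongly flagged
    + [Result(truly_ai=True, flagged=True)] * 3
    + [Result(truly_ai=True, flagged=False)]       # one AI draft slipped through
)

fpr, fnr = error_rates(sample)
print(f"false positive rate: {fpr:.1%}, false negative rate: {fnr:.1%}")
```

With only a dozen samples these estimates are very rough; the more labeled documents you check, the more the numbers tell you about how the tool treats your particular kind of writing.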

Ready to turn your AI drafts into polished, original content that truly connects? Rewritify helps you refine your text to sound authentically human, ensuring clarity and originality while sidestepping unreliable detectors. Try Rewritify for free and experience the difference.
